Releases: open-mmlab/mmpose

MMPose v1.3.2 Release Note

v1.3.2 (12/07/2024)

New Features

- Add center alignments for draw_texts in OpencvBackendVisualizer (#2958)

- Add wflw2coco (#2961)

- Support 300VW Dataset (#3005)

- Add RTMW3D for 3D wholebody pose estimation task (#3037)

Improvements

- In browse dataset : CombinedDataset element are now browse in turn, and image saved into their dataset name folder (#2985)

Bug Fixes

- Fix loss computation in MSPNHead (#2993)

- Fix bug in inferencer (#2966)

- Make category_id in CocoWholeBodyDataset as numpy.array (#2963)

Documentation

- Add rtmlib examples (#2923)

- Fix readthedocs configuration (#2979)

- Add more detailed comments (#2982)

- Improve documentation folder structure of ExLPose (#2977)

New Contributors

- @AntDum made their first contribution in #2958

- @Yanyirong made their first contribution in #2961

- @drazicmartin made their first contribution in #2977

- @KeqiangSun made their first contribution in #3005

- @jitrc made their first contribution in #3004

- @zgjja made their first contribution in #2963

- @jibranbinsaleem made their first contribution in #3027

- @cpunion made their first contribution in #3026

MMPose v1.3.1 Release Note

Fix the bug when downloading config and checkpoint using mim (see Issue #2918).

MMPose v1.3.0 Release Note

RTMO

We are exited to release RTMO:

- RTMO is the first one-stage pose estimation method that achieves both high accuracy and real-time speed.

- It performs best on crowded scenes. RTMO achieves 83.8% AP on the CrowdPose test set.

- RTMO is easy to run for inference and deployment. It does not require an extra human detector.

- Try it online with this demo by choosing

rtmo | body. - The paper is available on arXiv.

Improved RTMW

We have released additional RTMW models in various sizes:

| Config | Input Size | Whole AP | Whole AR | FLOPS (G) |

|---|---|---|---|---|

| RTMW-m | 256x192 | 58.2 | 67.3 | 4.3 |

| RTMW-l | 256x192 | 66.0 | 74.6 | 7.9 |

| RTMW-x | 256x192 | 67.2 | 75.2 | 13.1 |

| RTMW-l | 384x288 | 70.1 | 78.0 | 17.7 |

| RTMW-x | 384x288 | 70.2 | 78.1 | 29.3 |

The hand keypoint detection accuracy has been notably improved.

Pose Anything

We are glad to support the inference for the category-agnostic pose estimation method PoseAnything!

You can now specify ANY keypoints you want the model to detect, without needing extra training. Under the project folder:

- Download the pretrained model

- Run:

python demo.py --support [path_to_support_image] --query [path_to_query_image] --config configs/demo_b.py --checkpoint [path_to_pretrained_ckpt]

- Thanks to the author of PoseAnything (@orhir) for supporting their excellent work!

New Datasets

We have added support for two new datasets:

(CVPR 2023) ExLPose

ExLPose builds a new dataset of real low-light images with accurate pose labels. It can be helpful on tranining a pose estimation model working under extreme light conditions.

- Thanks @Yang-Changhui for helping with the integration of ExLPose!

- This is the task of our OpenMMLabCamp, if you also wish to contribute code to us, feel free to refer to this link to pick up the task!

(ICCV 2023) H3WB

H3WB (Human3.6M 3D WholeBody) extends the Human3.6M dataset with 3D whole-body annotations using the COCO wholebody skeleton. This dataset enables more comprehensive 3D pose analysis and benchmarking for whole-body methods.

- Supported by @xiexinch.

Contributors

@Tau-J

@Ben-Louis

@xiexinch

@Yang-Changhui

@orhir

@RFYoung

@yao5401

@icynic

@Jendker

@willyfh

@jit-a3

@Ginray

MMPose v1.2.0 Release Note

RTMW

We are excited to release the alpha version of RTMW:

- The first whole-body pose estimation model with accuracy exceeding 70 AP on COCO-Wholebody benchmark. RTMW-x achieves 70.2 AP.

- More accurate hand details for pose-guided image generation, gesture recognition, and human-computer interaction, etc.

- Compatible with

dw_openpose_fullpreprocessor in sd-webui-controlnet - Try it online with this demo by choosing wholebody(rtmw).

- The technical report will be released soon.

New Algorithms

We are glad to support the following new algorithms:

- (ICCV 2023) MotionBERT

- (ICCVW 2023) DWPose

- (ICLR 2023) EDPose

- (ICLR 2022) Uniformer

(ICCVW 2023) DWPose

We are glad to support the two-stage distillation method DWPose, which achieves the new SOTA performance on COCO-WholeBody.

- Since DWPose is the distilled model of RTMPose, you can directly load the weights of DWPose into RTMPose.

- DWPose has been supported in sd-webui-controlnet.

- You can also try DWPose online with this demo by choosing wholebody(dwpose).

Here is a guide to train DWPose:

-

Train DWPose with the first stage distillation

bash tools/dist_train.sh configs/wholebody_2d_keypoint/dwpose/ubody/s1_dis/rtmpose_x_dis_l_coco-ubody-384x288.py 8

-

Transfer the S1 distillation models into regular models

# first stage distillation python pth_transfer.py $dis_ckpt $new_pose_ckpt

-

Train DWPose with the second stage distillation

bash tools/dist_train.sh configs/wholebody_2d_keypoint/dwpose/ubody/s2_dis/dwpose_l-ll_coco-ubody-384x288.py 8

-

Transfer the S2 distillation models into regular models

# second stage distillation python pth_transfer.py $dis_ckpt $new_pose_ckpt --two_dis

- Thanks @yzd-v for helping with the integration of DWPose!

(ICCV 2023) MotionBERT

MotionBERT is the new SOTA method of Monocular 3D Human Pose Estimation on Human3.6M.

You can conviently try MotionBERT via the 3D Human Pose Demo with Inferencer:

python demo/inferencer_demo.py tests/data/coco/000000000785.jpg \

--pose3d human3d --vis-out-dir vis_results/human3d- Supported by @LareinaM

(ICLR 2023) EDPose

We support ED-Pose, an end-to-end framework with Explicit box Detection for multi-person Pose estimation. ED-Pose re-considers this task as two explicit box detection processes with a unified representation and regression supervision. In general, ED-Pose is conceptually simple without post-processing and dense heatmap supervision.

The checkpoint is converted from the official repo. The training of EDPose is not supported yet. It will be supported in the future updates.

You can conviently try EDPose via the 2D Human Pose Demo with Inferencer:

python demo/inferencer_demo.py tests/data/coco/000000197388.jpg \

--pose2d edpose_res50_8xb2-50e_coco-800x1333 --vis-out-dir vis_results- Thanks @LiuYi-Up for helping with the integration of EDPose!

- This is the task of our OpenMMLabCamp, if you also wish to contribute code to us, feel free to refer to this link to pick up the task!

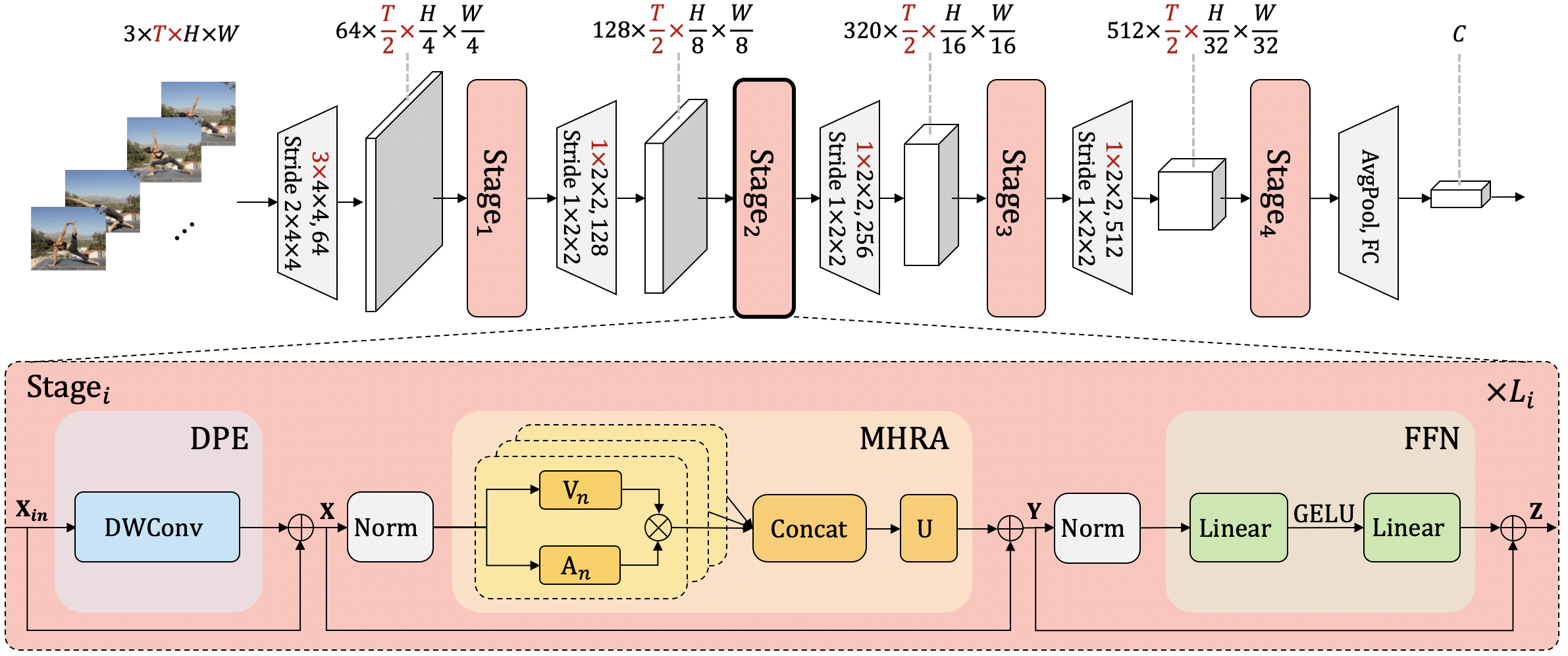

(ICLR 2022) Uniformer

In projects, we implement a topdown heatmap based human pose estimator, utilizing the approach outlined in UniFormer: Unifying Convolution and Self-attention for Visual Recognition (TPAMI 2023) and UniFormer: Unified Transformer for Efficient Spatiotemporal Representation Learning (ICLR 2022).

- Thanks @xin-li-67 for helping with the integration of Uniformer!

- This is the task of our OpenMMLabCamp, if you also wish to contribute code to us, feel free to refer to this link to pick up the task!

New Datasets

We have added support for two new datasets:

(CVPR 2023) UBody

UBody can boost 2D whole-body pose estimation and controllable image generation, especially for in-the-wild hand keypoint detection.

- Supported by @xiexinch

300W-LP

300W-LP contains the synthesized large-pose face images from 300W.

- Thanks @Yang-Changhui for helping with the integration of 300W-LP!

- This is the task of our OpenMMLabCamp, if you also wish to contribute code to us, feel free to refer to this link to pick up the task!

Contributors

MMPose v1.1.0 Release Note

New Datasets

We are glad to support 3 new datasets:

- (CVPR 2023) Human-Art

- (CVPR 2022) Animal Kingdom

- (AAAI 2020) LaPa

(CVPR 2023) Human-Art

Human-Art is a large-scale dataset that targets multi-scenario human-centric tasks to bridge the gap between natural and artificial scenes.

Contents of Human-Art:

- 50,000 images including human figures in 20 scenarios (5 natural scenarios, 3 2D artificial scenarios, and 12 2D artificial scenarios)

- Human-centric annotations include human bounding box, 21 2D human keypoints, human self-contact keypoints, and description text

- baseline human detector and human pose estimator trained on the joint of MSCOCO and Human-Art

Models trained on Human-Art:

Thanks @juxuan27 for helping with the integration of Human-Art!

(CVPR 2022) Animal Kingdom

Animal Kingdom provides multiple annotated tasks to enable a more thorough understanding of natural animal behaviors.

Results comparison:

| Arch | Input Size | PCK(0.05) Ours | Official Repo | Paper |

|---|---|---|---|---|

| P1_hrnet_w32 | 256x256 | 0.6323 | 0.6342 | 0.6606 |

| P2_hrnet_w32 | 256x256 | 0.3741 | 0.3726 | 0.393 |

| P3_mammals_hrnet_w32 | 256x256 | 0.571 | 0.5719 | 0.6159 |

| P3_amphibians_hrnet_w32 | 256x256 | 0.5358 | 0.5432 | 0.5674 |

| P3_reptiles_hrnet_w32 | 256x256 | 0.51 | 0.5 | 0.5606 |

| P3_birds_hrnet_w32 | 256x256 | 0.7671 | 0.7636 | 0.7735 |

| P3_fishes_hrnet_w32 | 256x256 | 0.6406 | 0.636 | 0.6825 |

For more details, see this page

Thanks @Dominic23331 for helping with the integration of Animal Kingdom!

(AAAI 2020) LaPa

Landmark guided face Parsing dataset (LaPa) consists of more than 22,000 facial images with abundant variations in expression, pose and occlusion, and each image of LaPa is provided with an 11-category pixel-level label map and 106-point landmarks.

Supported by @Tau-J

New Config Type

MMEngine introduced the pure Python style configuration file:

- Support navigating to base configuration file in IDE

- Support navigating to base variable in IDE

- Support navigating to source code of class in IDE

- Support inheriting two configuration files containing the same field

- Load the configuration file without other third-party requirements

Refer to the tutorial for more detailed usages.

We provided some examples here. Also, new config type of YOLOX-Pose is supported here.

Feel free to try this new feature and give us your feedback!

Improved RTMPose

We combined public datasets and released more powerful RTMPose models:

- 17-kpt and 26-kpt body models

- 21-kpt hand models

- 106-kpt face models

List of examples to deploy RTMPose:

- RTMPose-Deploy @HW140701 @Dominic23331

- RTMPose-Deploy is a C++ code example for RTMPose localized deployment.

- RTMPose inference with ONNXRuntime (Python) @IRONICBo

- This example shows how to run RTMPose inference with ONNXRuntime in Python.

- PoseTracker Android Demo

- PoseTracker Android Demo Prototype based on mmdeploy.

Check out this page to know more.

Supported by @Tau-J

3D Pose Lifter Refactory

We have migrated SimpleBaseline3D and VideoPose3D into MMPose v1.1.0. Users can easily use Inferencer and body3d demo to conduct inference.

Below is an example of how to use Inferencer to predict 3d pose:

python demo/inferencer_demo.py tests/data/coco/000000000785.jpg \

--pose3d human3d --vis-out-dir vis_results/human3d \

--rebase-keypoint-heightVideo result:

Supported by @LareinaM

Inference Speed-up & Webcam Inference

We have made a lot of improvements to our demo scripts:

- Much higher inference speed

- OpenCV-backend visualizer

- All demos support inference with webcam

Take topdown_demo_with_mmdet.py as example, you can conduct inference with webcam by specifying --input webcam:

# inference with webcam

python demo/topdown_demo_with_mmdet.py \

projects/rtmpose/rtmdet/person/rtmdet_nano_320-8xb32_coco-person.py \

https://download.openmmlab.com/mmpose/v1/projects/rtmpose/rtmdet_nano_8xb32-100e_coco-obj365-person-05d8511e.pth \

projects/rtmpose/rtmpose/body_2d_keypoint/rtmpose-m_8xb256-420e_coco-256x192.py \

https://download.openmmlab.com/mmpose/v1/projects/rtmposev1/rtmpose-m_simcc-aic-coco_pt-aic-coco_420e-256x192-63eb25f7_20230126.pth \

--input webcam \

--showSupported by @Ben-Louis and @LareinaM

New Contributors

- @xin-li-67 made their first contribution in #2205

- @irexyc made their first contribution in #2216

- @lu-minous made their first contribution in #2225

- @FishBigOcean made their first contribution in #2286

- @ATang0729 made their first contribution in #2201

- @HW140701 made their first contribution in #2316

- @IRONICBo made their first contribution in #2323

- @shuheilocale made their first contribution in #2340

- @Dominic23331 made their first contribution in #2139

- @notplus made their first contribution in #2365

- @juxuan27 made their first contribution in #2304

- @610265158 made their first contribution in #2366

- @CescMessi made their first contribution in #2385

- @huangjiyi made their first contribution in #2467

- @Billccx made their first contribution in #2417

- @mareksubocz made their first contribution in #2474

Full Changelog: v1.0.0...v1.1.0

MMPose V1.0.0 Release

Highlights

We are excited to announce the release of MMPose 1.0.0 as a part of the OpenMMLab 2.0 project! MMPose 1.0.0 introduces an updated framework structure for the core package and a new section called "Projects". This section showcases a range of engaging and versatile applications built upon the MMPose foundation.

In this latest release, we have significantly refactored the core package's code to make it clearer, more comprehensible, and disentangled. This has resulted in improved performance for several existing algorithms, ensuring that they now outperform their previous versions. Additionally, we have incorporated some cutting-edge algorithms, such as SimCC and ViTPose, to further enhance the capabilities of MMPose and provide users with a more comprehensive and powerful toolkit.

The new "Projects" section serves as an essential addition to MMPose, created to foster innovation and collaboration among users. This section offers the following attractive features:

- Flexible code contribution: Unlike the core package, the "Projects" section allows for a more flexible environment for code contributions, enabling faster integration of state-of-the-art models and features.

- Showcase of diverse applications: Explore a wide variety of projects built upon the MMPose foundation, such as deployment examples and combinations of pose estimation with other tasks.

- Fostering creativity and collaboration: Encourages users to experiment, build upon the MMPose platform, and share their innovative applications and techniques, creating an active community of developers and researchers.

Discover the possibilities within the "Projects" section and join the vibrant MMPose community in pushing the boundaries of pose estimation applications!

Exciting Features

RTMPose

RTMPose is a high-performance real-time multi-person pose estimation framework designed for practical applications. RTMPose offers high efficiency and accuracy, with various models achieving impressive AP scores on COCO and fast inference speeds on both CPU and GPU. It is also designed for easy deployment across various platforms and backends, such as ONNX, TensorRT, ncnn, OpenVINO, Linux, Windows, NVIDIA Jetson, and ARM. Additionally, it provides a pipeline inference API and SDK for Python, C++, C#, Java, and other languages.

[Project][Model Zoo][Tech Report]

Inferencer

In this release, we introduce the MMPoseInferencer, a versatile API for inference that accommodates multiple input types. The API enables users to easily specify and customize pose estimation models, streamlining the process of performing pose estimation with MMPose.

Usage:

python demo/inferencer_demo.py ${INPUTS} --pose2d ${MODEL} [OPTIONS]- The INPUTS can be an image or video path, an image folder, or a webcam feed. You no longer need different demo scripts for various input types.

- The inferencer supports specifying models with aliases such as 'human', 'animal', and 'face'. These aliases correspond to the advanced RTMPose models, which are fast and accurate. If you're unsure which model to use, the default one should suffice.

Example:

python demo/inferencer_demo.py tests/data/crowdpose --pose2d wholebodyAll images located in the tests/data/crowdpose folder will be processed using RTMPose. Here are the visualization results:

For more details about Inferencer, please refer to https://mmpose.readthedocs.io/en/latest/user_guides/inference.html

- Supported by @Ben-Louis in #1969

Visualization Improvements

In MMPose 1.0.0, we have enhanced the visualization capabilities for a more intuitive and insightful user experience, enabling a deeper understanding of the model's performance and keypoint predictions, and streamlining the process of fine-tuning and optimizing pose estimation models. The new visualization tool facilitates:

- Heatmap Visualization: Supporting both 1D and 2D heatmaps, users can easily visualize the distribution of keypoints and their confidence levels, providing a clearer understanding of the model's keypoint predictions.

| 2D Heatmap (ViTPose) | 1D Heatmap (RTMPose) |

|---|---|

|

|

- Inference Result Visualization during Training and Testing: Users now can visualize pose estimation results in validation or testing phases, quickly identify and address potential issues for faster model iteration and improved performance. For guidance on setting up visualization during training and testing, please refer to train and test.

- Support SimCC visualization by @jack0rich in #1912

- Support OpenPose-style visualization by @Zheng-LinXiao in #2115

MMPose for AIGC

We are excited to introduce the MMPose4AIGC project, a powerful tool that allows users to extract human pose information using MMPose and seamlessly integrate it with the T2I Adapter demo to generate stunning AI-generated images. The project makes it easy for users to generate both OpenPose-style and MMPose-style skeleton images, which can then be used as inputs in the T2I Adapter demo to create captivating AI-generated content based on pose information. Discover the potential of pose-guided image generation with the MMPose4AIGC project and elevate your AI-generated content to new heights!

YOLOX-Pose

YOLOX-Pose is a YOLO-based human detector and pose estimator, leveraging the methodology described in YOLO-Pose: Enhancing YOLO for Multi Person Pose Estimation Using Object Keypoint Similarity Loss (CVPRW 2022). With its lightweight and fast performance, this model is ideally suited for handling crowded scenes.

[Project][Paper]

- Supported by @Ben-Louis in #2020

Optimizations

In addition to new features, MMPose 1.0.0 delivers key optimizations for an enhanced user experience. With PyTorch 2.0 compatibility and a streamlined Codec module, you'll enjoy a more efficient and user-friendly pose estimation workflow like never before.

PyTorch 2.0 Compatibility

MMPose 1.0.0 is now compatible with PyTorch 2.0, ensuring that users can leverage the latest features and performance improvements offered by the PyTorch 2.0 framework when using MMPose. With the integration of inductor, users can expect faster model speeds. The table below shows several example models:

| Model | Training Speed | Memory |

|---|---|---|

| ViTPose-B | 29.6% ↑ (0.931 → 0.655) | 10586 → 10663 |

| ViTPose-S | 33.7% ↑ (0.563 → 0.373) | 6091 → 6170 |

| HRNet-w32 | 12.8% ↑ (0.553 → 0.482) | 9849 → 10145 |

| HRNet-w48 | 37.1% ↑ (0.437 → 0.275) | 7319 → 7394 |

| RTMPose-t | 6.3% ↑ (1.533 → 1.437) | 6292 → 6489 |

| RTMPose-s | 13.1% ↑ (1.645 → 1.430) | 9013 → 9208 |

New Design: Codecs

In pose estimation tasks, various algorithms require different target formats, such as normalized coordinates, vectors, and heatmaps. MMPose 1.0.0 introduces a unified Codec module to streamline the encoding and decoding processes:

- Encoder: Transforms input image space coordinates into required target formats.

- Decoder: Transforms model outputs into input image space coordinates, performing the inverse operation of the encoder.

This integration offers a more coherent and user-friendly experience when working with different pose estimation algorithms. For a detailed introduction to codecs, including concrete examples, please refer to our guide on learn about Codecs

Bug Fixes

- [Fix] fix readthedocs compiling requirements by @ly015 in #2071

- [Fix] fix online documentation by @ly015 in #2073

- [Fix] fix online docs by @ly015 in #2075

- [Fix] fix warnings when falling back to mmengine registry by @ly015 in #2082

- [Fix] fix CI by @ly015 in #2088

- [Fix] fix model names in metafiles by @Ben-Louis ...

MMPose V1.0.0rc1 Release

Highlights

- Release RTMPose, a high-performance real-time pose estimation algorithm with cross-platform deployment and inference support. See details at the project page

- Support several new algorithms: ViTPose (arXiv'2022), CID (CVPR'2022), DEKR (CVPR'2021)

- Add Inferencer, a convenient inference interface that perform pose estimation and visualization on images, videos and webcam streams with only one line of code

- Introduce Project, a new form for rapid and easy implementation of new algorithms and features in MMPose, which is more handy for community contributors

New Features

- Support RTMPose (#1971, #2024, #2028, #2030, #2040, #2057)

- Support Inferencer (#1969)

- Support ViTPose (#1876, #2056, #2058, #2065)

- Support CID (#1907)

- Support DEKR (#1834, #1901)

- Support training with multiple datasets (#1767, #1930, #1938, #2025)

- Add project to allow rapid and easy implementation of new models and features (#1914)

Improvements

- Improve documentation quality (#1846, #1858, #1872, #1899, #1925, #1945, #1952, #1990, #2023, #2042)

- Support visualizing keypoint indices (#2051)

- Support OpenPose style visualization (#2055)

- Accelerate image transpose in data pipelines with tensor operation (#1976)

- Support auto-import modules from registry (#1961)

- Support keypoint partition metric (#1944)

- Support SimCC 1D-heatmap visualization (#1912)

- Support saving predictions and data metainfo in demos (#1814, #1879)

- Support SimCC with DARK (#1870)

- Remove Gaussian blur for offset maps in UDP-regress (#1815)

- Refactor encoding interface of Codec for better extendibility and easier configuration (#1781)

- Support evaluating CocoMetric without annotation file (#1722)

- Improve unit tests (#1765)

Bug Fixes

- Fix repeated warnings from different ranks (#2053)

- Avoid frequent scope switching when using mmdet inference api (#2039)

- Remove EMA parameters and message hub data when publishing model checkpoints (#2036)

- Fix metainfo copying in dataset class (#2017)

- Fix top-down demo bug when there is no object detected (#2007)

- Fix config errors (#1882, #1906, #1995)

- Fix image demo failure when GUI is unavailable (#1968)

- Fix bug in AdaptiveWingLoss (#1953)

- Fix incorrect importing of RepeatDataset which is deprecated (#1943)

- Fix bug in bottom-up datasets that ignores images without instances (#1752, #1936)

- Fix upstream dependency issues (#1867, #1921)

- Fix evaluation issues and update results (#1763, #1773, #1780, #1850, #1868)

- Fix local registry missing warnings (#1849)

- Remove deprecated scripts for model deployment (#1845)

- Fix a bug in input transformation in BaseHead (#1843)

- Fix an interface mismatch with MMDetection in webcam demo (#1813)

- Fix a bug in heatmap visualization that causes incorrect scale (#1800)

- Add model metafiles (#1768)

New Contributors

- @ChenZhenGui made their first contribution in #1800

- @LareinaM made their first contribution in #1845

- @xinxinxinxu made their first contribution in #1843

- @jack0rich made their first contribution in #1912

- @zwfcrazy made their first contribution in #1944

- @LKJacky made their first contribution in #2024

- @tongda made their first contribution in #2028

- @LRuid made their first contribution in #2055

- @Zheng-LinXiao made their first contribution in #2057

Full Changelog: v1.0.0rc0...v1.0.0rc1

MMPose V1.0.0rc0 Release

New Features

- Support 4 light-weight pose estimation algorithms: SimCC (ECCV'2022), Debias-IPR (ICCV'2021), IPR (ECCV'2018), and DSNT (ArXiv'2018) (#1628) @Tau-J

Migrations

- Add Webcam API in MMPose 1.0 (#1638, #1662) @Ben-Louis

- Add codec for Associative Embedding (beta) (#1603) @ly015

Improvements

- Add a colab tutorial for MMPose 1.0 (#1660) @Tau-J

- Add model index in config folder (#1710, #1709, #1627) @ly015, @Tau-J, @Ben-Louis

- Update and improve documentation (#1692, #1656, #1681, #1677, #1664, #1659) @Tau-J, @Ben-Louis, @liqikai9

- Improve config structures and formats (#1651) @liqikai9

Bug Fixes

- Update mmengine version requirements (#1715) @Ben-Louis

- Update dependencies of pre-commit hooks (#1705) @Ben-Louis

- Fix mmcv version in DockerFile (#1704)

- Fix a bug in setting dataset metainfo in configs (#1684) @ly015

- Fix a bug in UDP training (#1682) @liqikai9

- Fix a bug in Dark decoding (#1676) @liqikai9

- Fix bugs in visualization (#1671, #1668, #1657) @liqikai9, @Ben-Louis

- Fix incorrect flops calculation (#1669) @liqikai9

- Fix

tensor.tilecompatibility issue for pytorch 1.6 (#1658) @ly015 - Fix compatibility with

MultilevelPixelData(#1647) @liqikai9

MMPose V0.29.0 Release

Highlights

- Support DEKR, CVPR'2021 (#1693) @JinluZhang1126, @jin-s13, @Ben-Louis

- Support CID, CVPR'2022 (#1604) @kennethwdk, @jin-s13, @Ben-Louis

Improvements

- Improve 3D pose estimation demo with viewpoint & height controlling and enriched input formats (#1481, #1490) @pallgeuer, @liqikai9

- Improve smoothing of 3D video pose estimation (#1479) @darcula1993

- Improve dependency management for smoother installation (#1491) @danielbraun89

- Improve documentation quality (#1680, #1525, #1516, #1513, #1511, #1507, #1506, #1525) @Ben-Louis, @Yulv-git, @jin-s13

Bug Fixes

- Update dependency versions of pre-commit-hooks (#1706, #1678) @Ben-Louis, @liqikai9

- Fix missing data transforms in RLE configs (#1632) @Ben-Louis

- Fix a bug in

fliplr_jointsthat causes error when keypoint visibility has float values (#1589) @walsvid - Fix hsigmoid default parameters (#1575) @Ben-Louis

- Fix a bug in UDP decoding (#1565) @liqikai9

- Fix a bug in pose tracking demo with non-COCO dataset (#1504) @daixinghome

- Fix typos and unused contents in configs (#1499, #1496) @liqikai9

MMPose V1.0.0-beta Release

We are excited to announce the release of MMPose 1.0.0beta.

MMPose 1.0.0beta is the first version of MMPose 1.x, a part of the OpenMMLab 2.0 projects.

Built upon the new training engine.

Highlights

-

New engines. MMPose 1.x is based on MMEngine, which provides a general and powerful runner that allows more flexible customizations and significantly simplifies the entrypoints of high-level interfaces.

-

Unified interfaces. As a part of the OpenMMLab 2.0 projects, MMPose 1.x unifies and refactors the interfaces and internal logics of train, testing, datasets, models, evaluation, and visualization. All the OpenMMLab 2.0 projects share the same design in those interfaces and logics to allow the emergence of multi-task/modality algorithms.

-

More documentation and tutorials. We add a bunch of documentation and tutorials to help users get started more smoothly. Read it here.

Breaking Changes

In this release, we made lots of major refactoring and modifications. Please refer to the migration guide for details and migration instructions.