Nicola Ramdass

Edouard Dufour

OmniPose [1] is a multi-scale framework for multi-person pose estimation. In addition to a modified HRNet backbone, its authors propose the “Waterfall Atrous Spatial Pyramid” module, or WASPv2.

The authors describe it as follows: "WASPv2 [...] is able to leverage both the larger Field-of-View of the Atrous Spatial Pyramid Pooling configuration and the reduced size of the cascade approach."

Adversarial approaches to human pose estimation have yielded good results with relatively simple networks [2].

In this project, we applied a similar adversarial architecture to a more complex model, namely the existing OmniPose model, to study its effect on performance.

An advantage of this kind of adversarial method is that it can improve a model's performance without adding computational cost at inference time, because the discriminator is only needed during training.
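A minimal sketch of the idea, assuming a standard GAN-style binary cross-entropy objective. This is a generic formulation, not necessarily the exact losses used in train_AN.py or in Adversarial PoseNet [2], and the weight adv_weight is a hypothetical value. The generator (here, OmniPose) is trained on its usual heatmap loss plus a term that rewards fooling the discriminator, while the discriminator learns to separate ground-truth heatmaps from predicted ones.

```python
import numpy as np

EPS = 1e-7  # keeps log() finite when a probability is exactly 0 or 1


def mse(pred, target):
    """Mean squared error between predicted and ground-truth heatmaps."""
    return float(np.mean((np.asarray(pred) - np.asarray(target)) ** 2))


def bce(prob, target):
    """Binary cross-entropy between discriminator probabilities and 0/1 targets."""
    prob = np.clip(np.asarray(prob, dtype=float), EPS, 1.0 - EPS)
    target = np.asarray(target, dtype=float)
    return float(-np.mean(target * np.log(prob) + (1.0 - target) * np.log(1.0 - prob)))


def generator_loss(pred_heatmaps, gt_heatmaps, d_prob_on_pred, adv_weight=0.01):
    """Heatmap regression loss plus an adversarial term.

    The adversarial term is small when the discriminator believes the
    predicted heatmaps are real (probability close to 1).
    adv_weight is a hypothetical value, not taken from the project.
    """
    d_prob_on_pred = np.asarray(d_prob_on_pred, dtype=float)
    adv = bce(d_prob_on_pred, np.ones_like(d_prob_on_pred))
    return mse(pred_heatmaps, gt_heatmaps) + adv_weight * adv


def discriminator_loss(d_prob_on_real, d_prob_on_pred):
    """The discriminator should output 1 for ground truth and 0 for predictions."""
    real = np.asarray(d_prob_on_real, dtype=float)
    fake = np.asarray(d_prob_on_pred, dtype=float)
    return bce(real, np.ones_like(real)) + bce(fake, np.zeros_like(fake))
```

Only the training losses involve the discriminator; at inference time the generator's forward pass runs on its own, which is why the adversarial setup adds no inference cost.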

The model is compatible, without adaptation, with the following public datasets: COCO and MPII.

In the case of COCO, evaluation is based on the Object Keypoint Similarity metric:

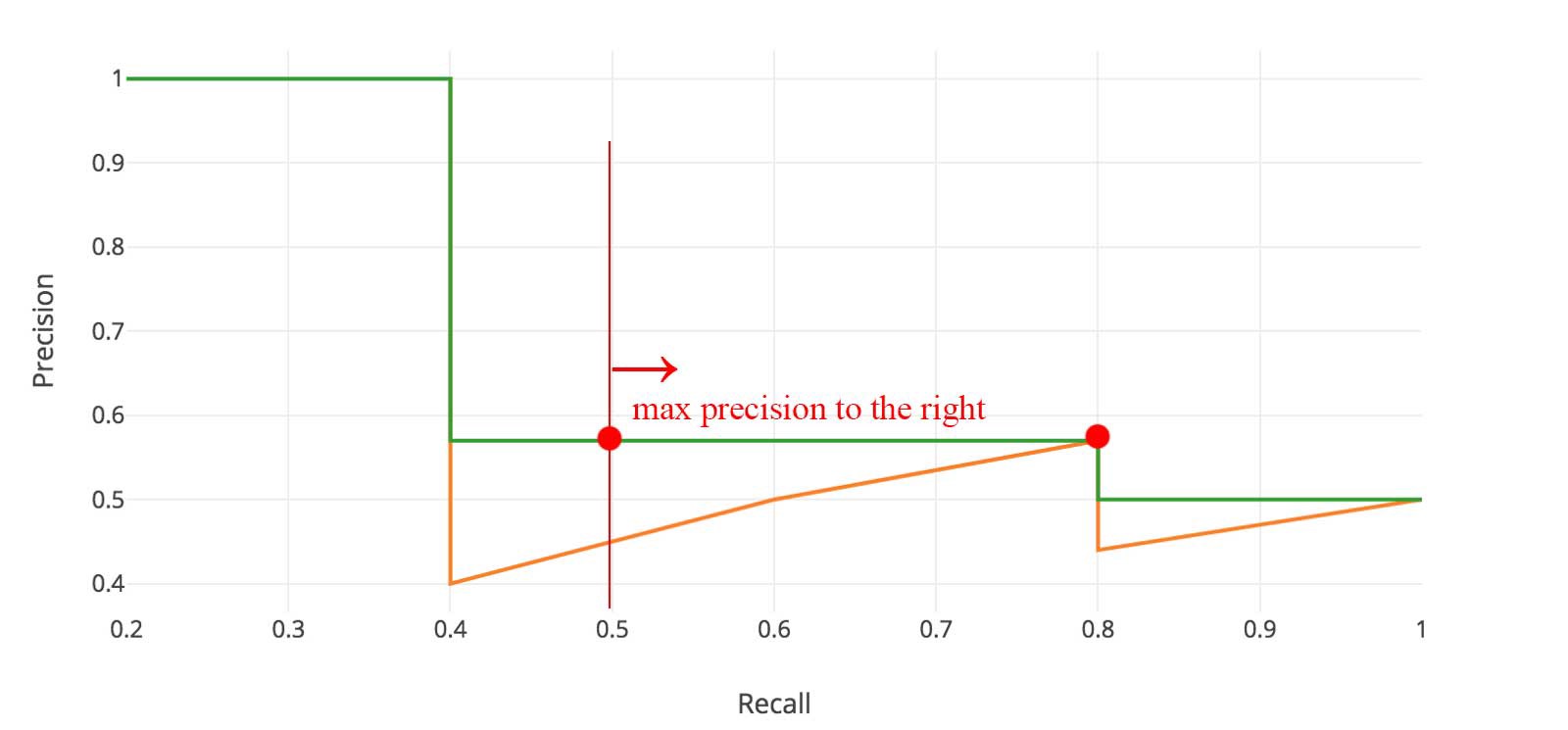
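For reference, the standard COCO definition is $\mathrm{OKS} = \sum_i \exp\left(-d_i^2 / (2 s^2 k_i^2)\right)\delta(v_i > 0) / \sum_i \delta(v_i > 0)$, where $d_i$ is the distance between the predicted and ground-truth keypoint $i$, $s^2$ is the object's area, $k_i = 2\sigma_i$ is a per-keypoint constant, and $v_i$ is the ground-truth visibility flag. The sketch below computes it with NumPy for one pair of poses. The sigma values are those used by pycocotools; returning 0 when no keypoint is labeled is a simplification made here, and the function name is illustrative.

```python
import numpy as np

# Per-keypoint sigmas for the 17 COCO person keypoints, as used by pycocotools.
COCO_SIGMAS = np.array([0.26, 0.25, 0.25, 0.35, 0.35, 0.79, 0.79, 0.72, 0.72,
                        0.62, 0.62, 1.07, 1.07, 0.87, 0.87, 0.89, 0.89]) / 10.0


def oks(pred_xy, gt_xy, gt_visibility, area, sigmas=COCO_SIGMAS):
    """Object Keypoint Similarity between one predicted and one ground-truth pose.

    pred_xy, gt_xy: arrays of shape (K, 2) with keypoint coordinates.
    gt_visibility:  array of shape (K,), COCO visibility flags (0 = not labeled).
    area:           ground-truth object area, i.e. s^2 in the formula.
    """
    pred_xy = np.asarray(pred_xy, dtype=float)
    gt_xy = np.asarray(gt_xy, dtype=float)
    labeled = np.asarray(gt_visibility) > 0
    if not labeled.any():
        return 0.0  # simplification: pycocotools handles this case differently
    k = 2.0 * sigmas                                  # k_i = 2 * sigma_i
    d2 = np.sum((pred_xy - gt_xy) ** 2, axis=1)       # squared distances d_i^2
    e = d2 / (2.0 * (area + np.spacing(1)) * k ** 2)  # d_i^2 / (2 s^2 k_i^2)
    return float(np.mean(np.exp(-e[labeled])))        # average over labeled keypoints
```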

OKS plays the same role as intersection over union (IoU) in object detection: a predicted pose counts as a true positive if its OKS with a ground-truth pose is above a given threshold, and as a false positive otherwise. These counts are then used to compute precision and recall.
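As a sketch of how the threshold turns OKS values into true and false positives, the functions below greedily match detections (highest score first) to unmatched ground-truth poses, in the spirit of the COCO evaluation, and then accumulate precision and recall. The function names and the detections-by-ground-truths OKS matrix input are illustrative assumptions; details such as crowd regions and area ranges are omitted.

```python
import numpy as np


def match_detections(oks_matrix, scores, threshold=0.5):
    """Label each detection as true positive (True) or false positive (False).

    oks_matrix[i, j] is the OKS between detection i and ground-truth pose j.
    Detections are processed in descending score order; each one is matched to
    the unmatched ground truth with the highest OKS, provided OKS >= threshold.
    Returns the TP flags in that descending-score order.
    """
    oks_matrix = np.asarray(oks_matrix, dtype=float)
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    gt_taken = np.zeros(oks_matrix.shape[1], dtype=bool)
    is_tp = np.zeros(len(order), dtype=bool)
    for rank, det in enumerate(order):
        # Already-matched ground truths cannot be matched again.
        candidates = np.where(gt_taken, -np.inf, oks_matrix[det])
        best = int(np.argmax(candidates)) if candidates.size else -1
        if best >= 0 and candidates[best] >= threshold:
            gt_taken[best] = True
            is_tp[rank] = True
    return is_tp


def precision_recall(is_tp, num_gt):
    """Cumulative precision and recall along the score-sorted detection list."""
    is_tp = np.asarray(is_tp, dtype=bool)
    tp = np.cumsum(is_tp)
    fp = np.cumsum(~is_tp)
    recall = tp / max(num_gt, 1)
    precision = tp / np.maximum(tp + fp, 1)
    return precision, recall
```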

We then plot the precision/recall curve for a given OKS threshold and, for each recall point, take the highest precision at that recall or any higher recall (that is, the highest precision to its right on the curve).
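The interpolation step can be sketched as follows: precision is replaced by its running maximum from the right, then sampled at evenly spaced recall levels and averaged. The 101 recall levels (0, 0.01, ..., 1) follow the COCO evaluation code; the function name is illustrative.

```python
import numpy as np


def interpolated_ap(precision, recall, num_recall_levels=101):
    """Average precision from a precision/recall curve.

    precision, recall: arrays ordered by descending detection score, so recall
    is non-decreasing. For each recall level r, the interpolated precision is
    the highest precision at any recall >= r (i.e. to the right on the curve);
    levels that are never reached contribute 0.
    """
    precision = np.asarray(precision, dtype=float).copy()
    recall = np.asarray(recall, dtype=float)
    # Running maximum from the right: precision[i] = max(precision[i:]).
    for i in range(len(precision) - 2, -1, -1):
        precision[i] = max(precision[i], precision[i + 1])
    levels = np.linspace(0.0, 1.0, num_recall_levels)
    # Index of the first point on the curve whose recall reaches each level.
    idx = np.searchsorted(recall, levels, side="left")
    sampled = np.zeros(num_recall_levels)
    reached = idx < len(precision)
    sampled[reached] = precision[idx[reached]]
    return float(sampled.mean())
```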

We report different

First, plain OmniPose (OmniPose) was retrained for 18 epochs to obtain a baseline comparable with the adversarial runs, since the original paper trained for 210 epochs. Its best average precision was 0.549.

The OmniPose model was then used as the generator in the network described above, with the discriminator from the Adversarial PoseNet paper [2] (OmniPoseAN). This model was trained from scratch for 18 epochs and achieved an average precision of 0.519. Although this is slightly lower, training of the adversarial network was somewhat more stable from epoch to epoch than that of plain OmniPose. This comparison over the 18 epochs is shown in the graph below.

Finally, a pretrained OmniPose model was loaded into the adversarial network (OmniPoseAN2) and trained for 10 more epochs, reaching an average precision of 0.594.

Below, the same image is shown three times, once after inference with each of the models described above. OmniPose estimates the pose quite well. OmniPoseAN appears to perform very poorly despite a similar average precision. OmniPoseAN2 performs reasonably well, with one misplaced keypoint, even though it reports the highest average precision.

Another example for all three models is shown below.

Download the COCO dataset and extract it to ${OMNIPOSE_ROOT}/data with the following layout:

    ${OMNIPOSE_ROOT}
    |-- data
    `-- |-- coco
        `-- |-- annotations
            |   |-- person_keypoints_train2017.json
            |   `-- person_keypoints_val2017.json
            `-- images
                |-- train2017.zip
                `-- val2017.zip

Download the MPII dataset and extract it to ${OMNIPOSE_ROOT}/data with the following layout:

    ${OMNIPOSE_ROOT}
    |-- data
    `-- |-- mpii
        `-- |-- annot
            |   |-- train.json
            |   `-- valid.json
            `-- images
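Before training, the two dataset layouts above can be checked with a short script. This is a hypothetical helper, not part of the repository; the expected paths are copied from the directory trees, and root stands for ${OMNIPOSE_ROOT}.

```python
from pathlib import Path

# Expected files/folders relative to ${OMNIPOSE_ROOT}, copied from the trees above.
EXPECTED = {
    "coco": [
        "data/coco/annotations/person_keypoints_train2017.json",
        "data/coco/annotations/person_keypoints_val2017.json",
        "data/coco/images/train2017.zip",
        "data/coco/images/val2017.zip",
    ],
    "mpii": [
        "data/mpii/annot/train.json",
        "data/mpii/annot/valid.json",
        "data/mpii/images",
    ],
}


def missing_paths(root, dataset):
    """Return the expected paths for `dataset` that do not exist under `root`."""
    root = Path(root)
    return [p for p in EXPECTED[dataset] if not (root / p).exists()]


if __name__ == "__main__":
    import sys

    # Usage (hypothetical): python check_layout.py /path/to/OmniPose
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    for name in EXPECTED:
        missing = missing_paths(root, name)
        print(f"{name}: " + ("OK" if not missing else "missing " + ", ".join(missing)))
```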

To train the plain OmniPose model, run the run_train.sh bash script with the selected configuration; it calls train.py for training. In this project, the OmniPose/experiments/coco/omnipose_w48_384x288_train.yaml configuration is used for the comparison.

To train OmniPose with adversarial training, run the run_train_AN.sh bash script with the selected configuration; it calls train_AN.py for training. For a fair comparison with plain OmniPose, the same configuration file, OmniPose/experiments/coco/omnipose_w48_384x288_train.yaml, is used.

In the configuration files, make sure the ROOT argument is set to the path where the data is stored.

The weights of the models can be found here.

To run inference, run the run_demo.sh bash script with the selected configuration (here, OmniPose/experiments/coco/omnipose_w48_384x288_train.yaml). Make sure the configuration file contains the correct path to the checkpoint of the model you want to run:

Pure OmniPose: /OmniPose/trained/coco/omnipose/omnipose_w48_384x288_train/checkpoint.pth

OmniPose with AN from scratch: /OmniPose/trained_GAN/coco/omnipose/omnipose_w48_384x288_train/checkpoint.pth

OmniPose with AN with pre-trained: /OmniPose/trained_GAN_2/coco/omnipose/omnipose_w48_384x288_train/checkpoint.pth

During inference, an output image is saved to the folder OmniPose/samples once every 100 images.

The proposed method does not perform very well, likely because of several factors: limited training time and resources, the structure of the models, and possibly the choice of hyperparameters. However, the approach did improve performance when starting from pretrained weights, as in OmniPoseAN2. The inference function may also have been faulty, since many images showed wrong keypoints despite a fairly high average precision.

[1] Artacho, Bruno, and Andreas Savakis. "OmniPose: A multi-scale framework for multi-person pose estimation." arXiv preprint arXiv:2103.10180 (2021).

[2] Chen, Yu, et al. "Adversarial PoseNet: A structure-aware convolutional network for human pose estimation." Proceedings of the IEEE International Conference on Computer Vision (2017).
