+

+

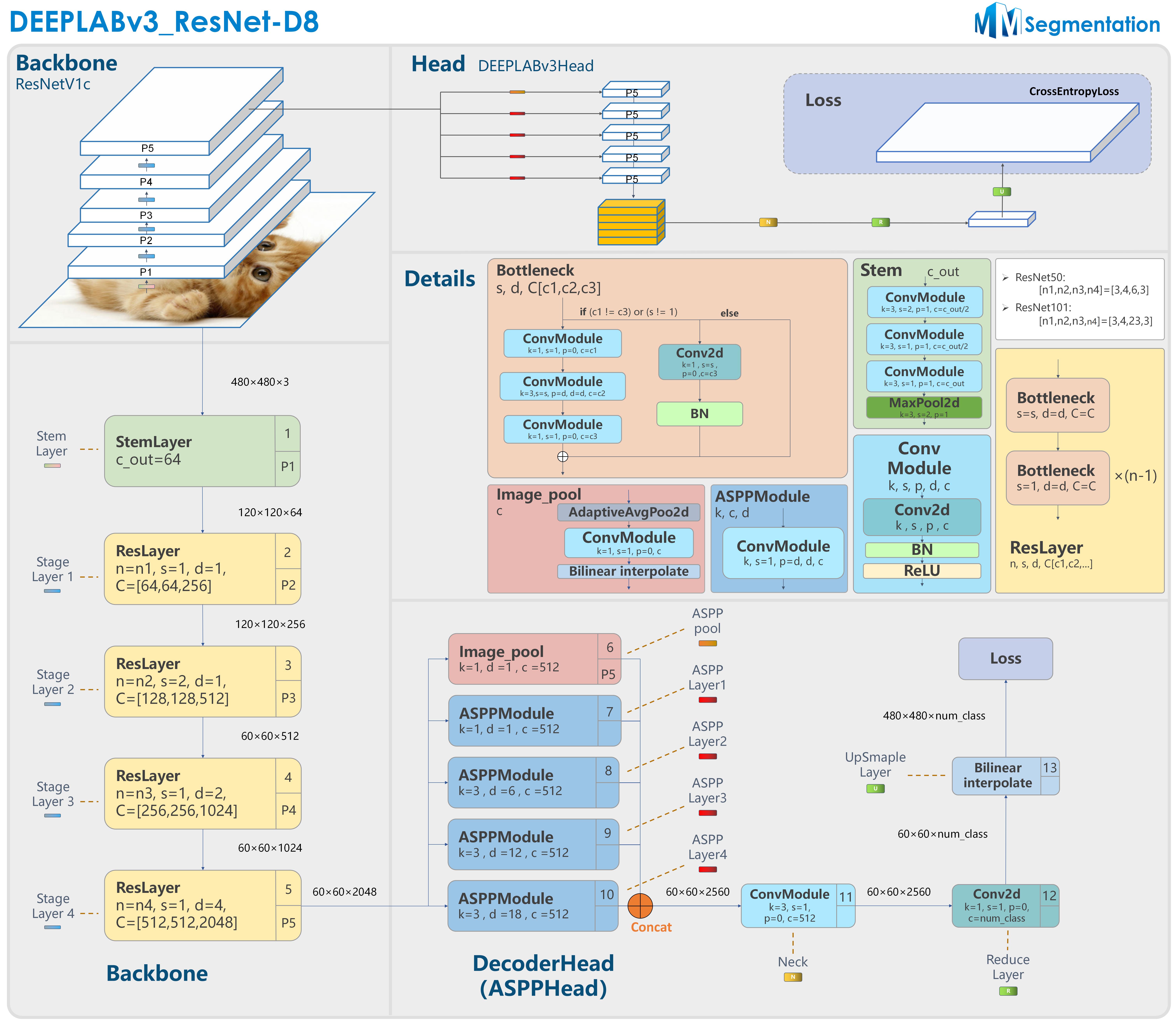

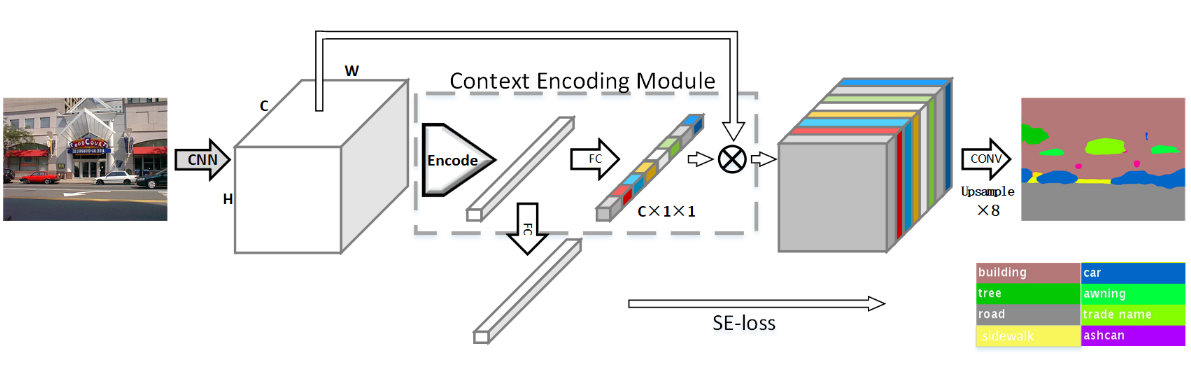

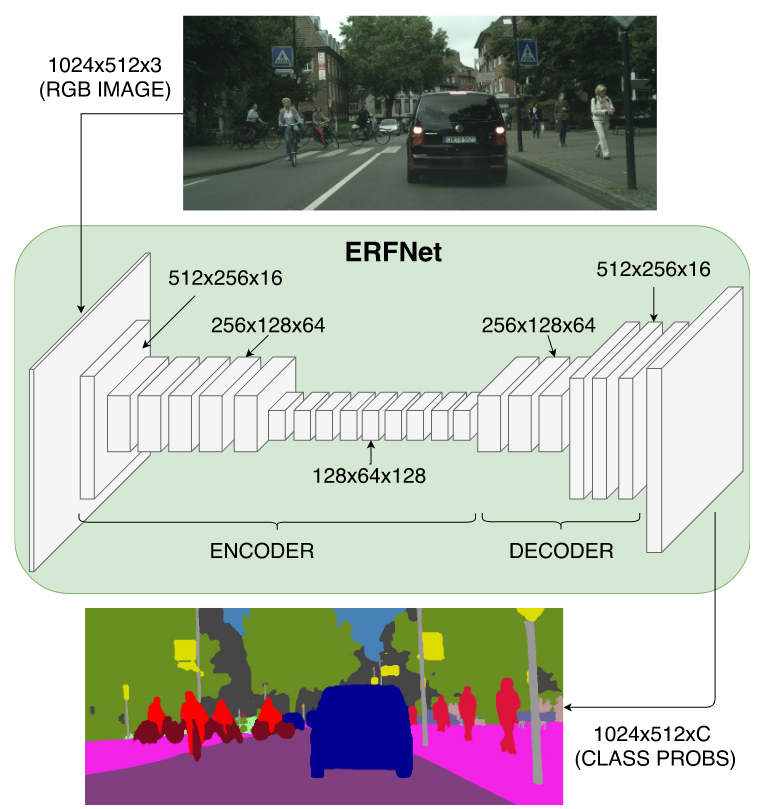

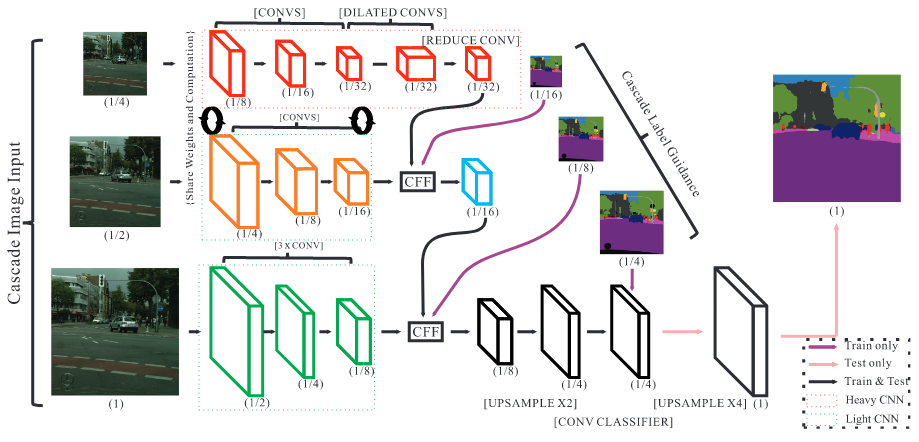

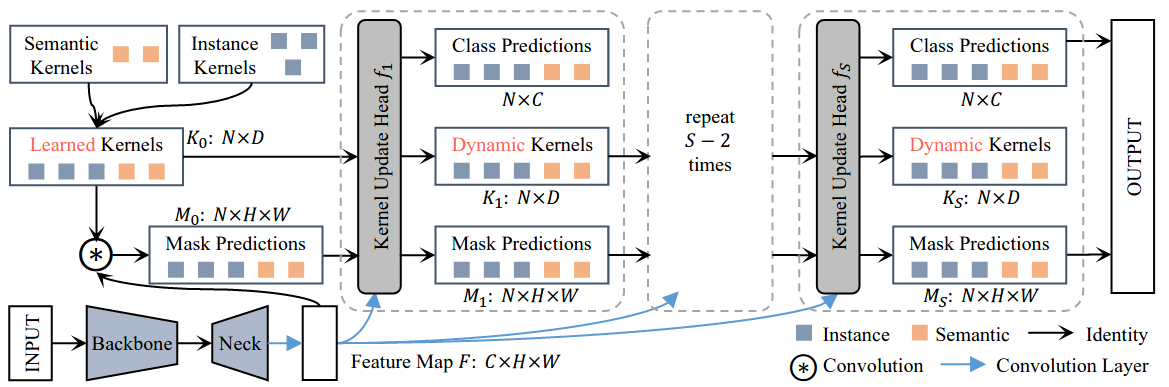

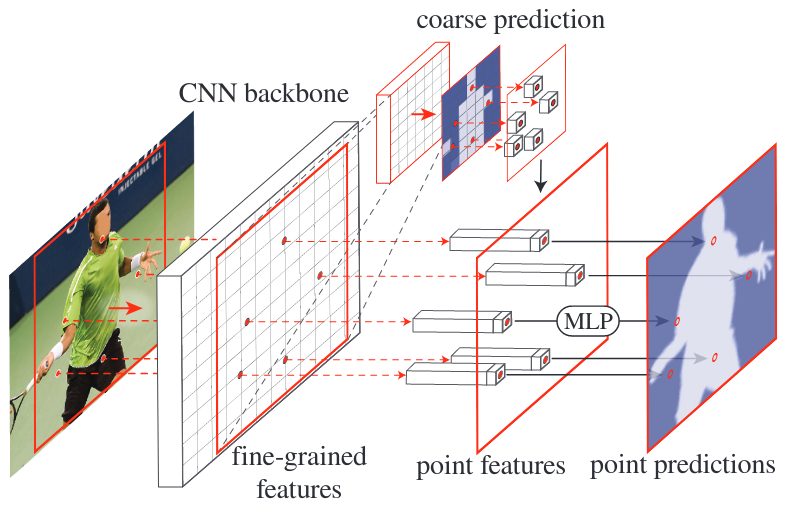

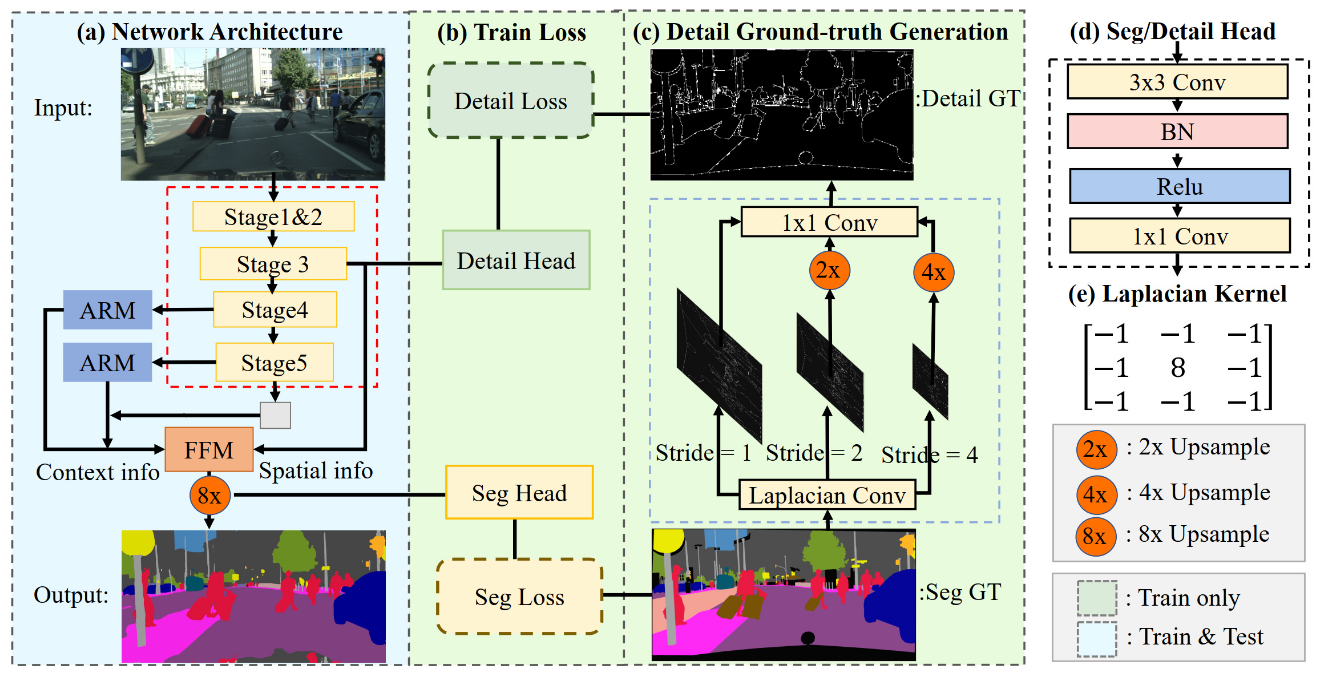

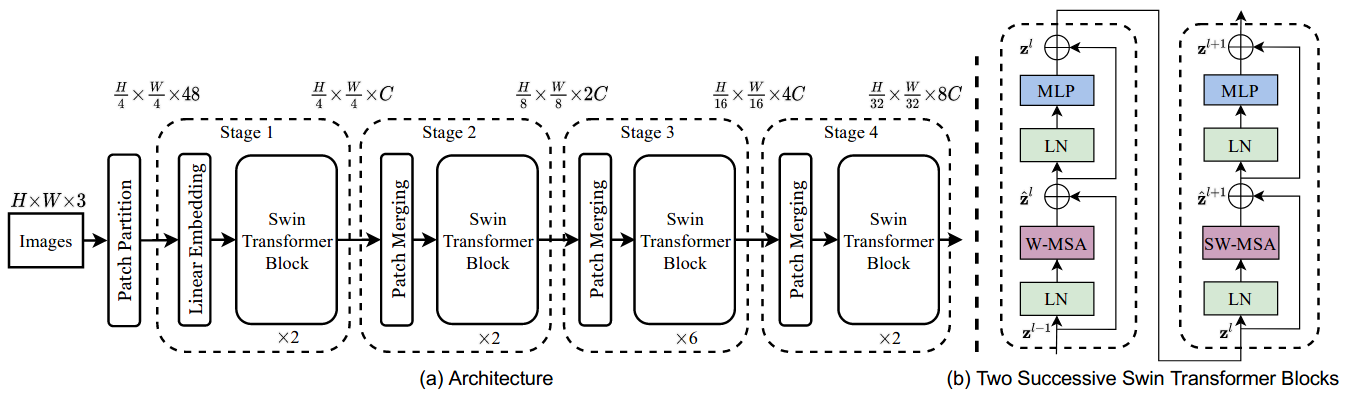

+ +DEEPLABv3_ResNet-D8 model structure

+DEEPLABv3_ResNet-D8 model structure

-## Citation

-

-```bibtext

-@article{chen2017rethinking,

- title={Rethinking atrous convolution for semantic image segmentation},

- author={Chen, Liang-Chieh and Papandreou, George and Schroff, Florian and Adam, Hartwig},

- journal={arXiv preprint arXiv:1706.05587},

- year={2017}

-}

-```

-

## Results and models

### Cityscapes

-| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

-| ---------------- | --------------- | --------- | ------: | -------- | -------------- | ----: | ------------: | ---------------------------------------------------------------------------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ |

-| DeepLabV3 | R-50-D8 | 512x1024 | 40000 | 6.1 | 2.57 | 79.09 | 80.45 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb2-40k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_40k_cityscapes/deeplabv3_r50-d8_512x1024_40k_cityscapes_20200605_022449-acadc2f8.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_40k_cityscapes/deeplabv3_r50-d8_512x1024_40k_cityscapes_20200605_022449.log.json) |

-| DeepLabV3 | R-101-D8 | 512x1024 | 40000 | 9.6 | 1.92 | 77.12 | 79.61 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb2-40k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_40k_cityscapes/deeplabv3_r101-d8_512x1024_40k_cityscapes_20200605_012241-7fd3f799.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_40k_cityscapes/deeplabv3_r101-d8_512x1024_40k_cityscapes_20200605_012241.log.json) |

-| DeepLabV3 | R-50-D8 | 769x769 | 40000 | 6.9 | 1.11 | 78.58 | 79.89 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb2-40k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_40k_cityscapes/deeplabv3_r50-d8_769x769_40k_cityscapes_20200606_113723-7eda553c.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_40k_cityscapes/deeplabv3_r50-d8_769x769_40k_cityscapes_20200606_113723.log.json) |

-| DeepLabV3 | R-101-D8 | 769x769 | 40000 | 10.9 | 0.83 | 79.27 | 80.11 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb2-40k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_40k_cityscapes/deeplabv3_r101-d8_769x769_40k_cityscapes_20200606_113809-c64f889f.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_40k_cityscapes/deeplabv3_r101-d8_769x769_40k_cityscapes_20200606_113809.log.json) |

-| DeepLabV3 | R-18-D8 | 512x1024 | 80000 | 1.7 | 13.78 | 76.70 | 78.27 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r18-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_512x1024_80k_cityscapes/deeplabv3_r18-d8_512x1024_80k_cityscapes_20201225_021506-23dffbe2.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_512x1024_80k_cityscapes/deeplabv3_r18-d8_512x1024_80k_cityscapes-20201225_021506.log.json) |

-| DeepLabV3 | R-50-D8 | 512x1024 | 80000 | - | - | 79.32 | 80.57 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_80k_cityscapes/deeplabv3_r50-d8_512x1024_80k_cityscapes_20200606_113404-b92cfdd4.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_80k_cityscapes/deeplabv3_r50-d8_512x1024_80k_cityscapes_20200606_113404.log.json) |

-| DeepLabV3 | R-101-D8 | 512x1024 | 80000 | - | - | 80.20 | 81.21 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_80k_cityscapes/deeplabv3_r101-d8_512x1024_80k_cityscapes_20200606_113503-9e428899.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_80k_cityscapes/deeplabv3_r101-d8_512x1024_80k_cityscapes_20200606_113503.log.json) |

-| DeepLabV3 (FP16) | R-101-D8 | 512x1024 | 80000 | 5.75 | 3.86 | 80.48 | - | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb2-amp-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes_20200717_230920-774d9cec.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes_20200717_230920.log.json) |

-| DeepLabV3 | R-18-D8 | 769x769 | 80000 | 1.9 | 5.55 | 76.60 | 78.26 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r18-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_769x769_80k_cityscapes/deeplabv3_r18-d8_769x769_80k_cityscapes_20201225_021506-6452126a.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_769x769_80k_cityscapes/deeplabv3_r18-d8_769x769_80k_cityscapes-20201225_021506.log.json) |

-| DeepLabV3 | R-50-D8 | 769x769 | 80000 | - | - | 79.89 | 81.06 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_80k_cityscapes/deeplabv3_r50-d8_769x769_80k_cityscapes_20200606_221338-788d6228.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_80k_cityscapes/deeplabv3_r50-d8_769x769_80k_cityscapes_20200606_221338.log.json) |

-| DeepLabV3 | R-101-D8 | 769x769 | 80000 | - | - | 79.67 | 80.81 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_80k_cityscapes/deeplabv3_r101-d8_769x769_80k_cityscapes_20200607_013353-60e95418.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_80k_cityscapes/deeplabv3_r101-d8_769x769_80k_cityscapes_20200607_013353.log.json) |

-| DeepLabV3 | R-101-D16-MG124 | 512x1024 | 40000 | 4.7 | - 6.96 | 76.71 | 78.63 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d16-mg124_4xb2-40k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d16-mg124_512x1024_40k_cityscapes/deeplabv3_r101-d16-mg124_512x1024_40k_cityscapes_20200908_005644-67b0c992.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d16-mg124_512x1024_40k_cityscapes/deeplabv3_r101-d16-mg124_512x1024_40k_cityscapes-20200908_005644.log.json) |

-| DeepLabV3 | R-101-D16-MG124 | 512x1024 | 80000 | - | - | 78.36 | 79.84 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d16-mg124_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes_20200908_005644-57bb8425.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes-20200908_005644.log.json) |

-| DeepLabV3 | R-18b-D8 | 512x1024 | 80000 | 1.6 | 13.93 | 76.26 | 77.88 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r18b-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_512x1024_80k_cityscapes/deeplabv3_r18b-d8_512x1024_80k_cityscapes_20201225_094144-46040cef.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_512x1024_80k_cityscapes/deeplabv3_r18b-d8_512x1024_80k_cityscapes-20201225_094144.log.json) |

-| DeepLabV3 | R-50b-D8 | 512x1024 | 80000 | 6.0 | 2.74 | 79.63 | 80.98 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50b-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_512x1024_80k_cityscapes/deeplabv3_r50b-d8_512x1024_80k_cityscapes_20201225_155148-ec368954.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_512x1024_80k_cityscapes/deeplabv3_r50b-d8_512x1024_80k_cityscapes-20201225_155148.log.json) |

-| DeepLabV3 | R-101b-D8 | 512x1024 | 80000 | 9.5 | 1.81 | 80.01 | 81.21 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101b-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_512x1024_80k_cityscapes/deeplabv3_r101b-d8_512x1024_80k_cityscapes_20201226_171821-8fd49503.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_512x1024_80k_cityscapes/deeplabv3_r101b-d8_512x1024_80k_cityscapes-20201226_171821.log.json) |

-| DeepLabV3 | R-18b-D8 | 769x769 | 80000 | 1.8 | 5.79 | 75.63 | 77.51 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r18b-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_769x769_80k_cityscapes/deeplabv3_r18b-d8_769x769_80k_cityscapes_20201225_094144-fdc985d9.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_769x769_80k_cityscapes/deeplabv3_r18b-d8_769x769_80k_cityscapes-20201225_094144.log.json) |

-| DeepLabV3 | R-50b-D8 | 769x769 | 80000 | 6.8 | 1.16 | 78.80 | 80.27 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50b-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_769x769_80k_cityscapes/deeplabv3_r50b-d8_769x769_80k_cityscapes_20201225_155404-87fb0cf4.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_769x769_80k_cityscapes/deeplabv3_r50b-d8_769x769_80k_cityscapes-20201225_155404.log.json) |

-| DeepLabV3 | R-101b-D8 | 769x769 | 80000 | 10.7 | 0.82 | 79.41 | 80.73 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101b-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_769x769_80k_cityscapes/deeplabv3_r101b-d8_769x769_80k_cityscapes_20201226_190843-9142ee57.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_769x769_80k_cityscapes/deeplabv3_r101b-d8_769x769_80k_cityscapes-20201226_190843.log.json) |

+| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |

+| ---------------- | --------------- | --------- | ------: | -------- | -------------- | ------ | ----: | ------------: | ------------------------------------------------------------------------------------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ |

+| DeepLabV3 | R-50-D8 | 512x1024 | 40000 | 6.1 | 2.57 | V100 | 79.09 | 80.45 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb2-40k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_40k_cityscapes/deeplabv3_r50-d8_512x1024_40k_cityscapes_20200605_022449-acadc2f8.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_40k_cityscapes/deeplabv3_r50-d8_512x1024_40k_cityscapes_20200605_022449.log.json) |

+| DeepLabV3 | R-101-D8 | 512x1024 | 40000 | 9.6 | 1.92 | V100 | 77.12 | 79.61 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb2-40k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_40k_cityscapes/deeplabv3_r101-d8_512x1024_40k_cityscapes_20200605_012241-7fd3f799.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_40k_cityscapes/deeplabv3_r101-d8_512x1024_40k_cityscapes_20200605_012241.log.json) |

+| DeepLabV3 | R-50-D8 | 769x769 | 40000 | 6.9 | 1.11 | V100 | 78.58 | 79.89 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb2-40k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_40k_cityscapes/deeplabv3_r50-d8_769x769_40k_cityscapes_20200606_113723-7eda553c.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_40k_cityscapes/deeplabv3_r50-d8_769x769_40k_cityscapes_20200606_113723.log.json) |

+| DeepLabV3 | R-101-D8 | 769x769 | 40000 | 10.9 | 0.83 | V100 | 79.27 | 80.11 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb2-40k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_40k_cityscapes/deeplabv3_r101-d8_769x769_40k_cityscapes_20200606_113809-c64f889f.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_40k_cityscapes/deeplabv3_r101-d8_769x769_40k_cityscapes_20200606_113809.log.json) |

+| DeepLabV3 | R-18-D8 | 512x1024 | 80000 | 1.7 | 13.78 | V100 | 76.70 | 78.27 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r18-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_512x1024_80k_cityscapes/deeplabv3_r18-d8_512x1024_80k_cityscapes_20201225_021506-23dffbe2.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_512x1024_80k_cityscapes/deeplabv3_r18-d8_512x1024_80k_cityscapes-20201225_021506.log.json) |

+| DeepLabV3 | R-50-D8 | 512x1024 | 80000 | - | - | V100 | 79.32 | 80.57 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_80k_cityscapes/deeplabv3_r50-d8_512x1024_80k_cityscapes_20200606_113404-b92cfdd4.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_80k_cityscapes/deeplabv3_r50-d8_512x1024_80k_cityscapes_20200606_113404.log.json) |

+| DeepLabV3 | R-101-D8 | 512x1024 | 80000 | - | - | V100 | 80.20 | 81.21 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_80k_cityscapes/deeplabv3_r101-d8_512x1024_80k_cityscapes_20200606_113503-9e428899.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_80k_cityscapes/deeplabv3_r101-d8_512x1024_80k_cityscapes_20200606_113503.log.json) |

+| DeepLabV3 (FP16) | R-101-D8 | 512x1024 | 80000 | 5.75 | 3.86 | V100 | 80.48 | - | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb2-amp-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes_20200717_230920-774d9cec.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes_20200717_230920.log.json) |

+| DeepLabV3 | R-18-D8 | 769x769 | 80000 | 1.9 | 5.55 | V100 | 76.60 | 78.26 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r18-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_769x769_80k_cityscapes/deeplabv3_r18-d8_769x769_80k_cityscapes_20201225_021506-6452126a.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_769x769_80k_cityscapes/deeplabv3_r18-d8_769x769_80k_cityscapes-20201225_021506.log.json) |

+| DeepLabV3 | R-50-D8 | 769x769 | 80000 | - | - | V100 | 79.89 | 81.06 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_80k_cityscapes/deeplabv3_r50-d8_769x769_80k_cityscapes_20200606_221338-788d6228.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_80k_cityscapes/deeplabv3_r50-d8_769x769_80k_cityscapes_20200606_221338.log.json) |

+| DeepLabV3 | R-101-D8 | 769x769 | 80000 | - | - | V100 | 79.67 | 80.81 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_80k_cityscapes/deeplabv3_r101-d8_769x769_80k_cityscapes_20200607_013353-60e95418.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_80k_cityscapes/deeplabv3_r101-d8_769x769_80k_cityscapes_20200607_013353.log.json) |

+| DeepLabV3 | R-101-D16-MG124 | 512x1024 | 40000 | 4.7 | 6.96 | V100 | 76.71 | 78.63 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d16-mg124_4xb2-40k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d16-mg124_512x1024_40k_cityscapes/deeplabv3_r101-d16-mg124_512x1024_40k_cityscapes_20200908_005644-67b0c992.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d16-mg124_512x1024_40k_cityscapes/deeplabv3_r101-d16-mg124_512x1024_40k_cityscapes-20200908_005644.log.json) |

+| DeepLabV3 | R-101-D16-MG124 | 512x1024 | 80000 | - | - | V100 | 78.36 | 79.84 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d16-mg124_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes_20200908_005644-57bb8425.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes-20200908_005644.log.json) |

+| DeepLabV3 | R-18b-D8 | 512x1024 | 80000 | 1.6 | 13.93 | V100 | 76.26 | 77.88 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r18b-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_512x1024_80k_cityscapes/deeplabv3_r18b-d8_512x1024_80k_cityscapes_20201225_094144-46040cef.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_512x1024_80k_cityscapes/deeplabv3_r18b-d8_512x1024_80k_cityscapes-20201225_094144.log.json) |

+| DeepLabV3 | R-50b-D8 | 512x1024 | 80000 | 6.0 | 2.74 | V100 | 79.63 | 80.98 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50b-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_512x1024_80k_cityscapes/deeplabv3_r50b-d8_512x1024_80k_cityscapes_20201225_155148-ec368954.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_512x1024_80k_cityscapes/deeplabv3_r50b-d8_512x1024_80k_cityscapes-20201225_155148.log.json) |

+| DeepLabV3 | R-101b-D8 | 512x1024 | 80000 | 9.5 | 1.81 | V100 | 80.01 | 81.21 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101b-d8_4xb2-80k_cityscapes-512x1024.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_512x1024_80k_cityscapes/deeplabv3_r101b-d8_512x1024_80k_cityscapes_20201226_171821-8fd49503.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_512x1024_80k_cityscapes/deeplabv3_r101b-d8_512x1024_80k_cityscapes-20201226_171821.log.json) |

+| DeepLabV3 | R-18b-D8 | 769x769 | 80000 | 1.8 | 5.79 | V100 | 75.63 | 77.51 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r18b-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_769x769_80k_cityscapes/deeplabv3_r18b-d8_769x769_80k_cityscapes_20201225_094144-fdc985d9.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_769x769_80k_cityscapes/deeplabv3_r18b-d8_769x769_80k_cityscapes-20201225_094144.log.json) |

+| DeepLabV3 | R-50b-D8 | 769x769 | 80000 | 6.8 | 1.16 | V100 | 78.80 | 80.27 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50b-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_769x769_80k_cityscapes/deeplabv3_r50b-d8_769x769_80k_cityscapes_20201225_155404-87fb0cf4.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_769x769_80k_cityscapes/deeplabv3_r50b-d8_769x769_80k_cityscapes-20201225_155404.log.json) |

+| DeepLabV3 | R-101b-D8 | 769x769 | 80000 | 10.7 | 0.82 | V100 | 79.41 | 80.73 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101b-d8_4xb2-80k_cityscapes-769x769.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_769x769_80k_cityscapes/deeplabv3_r101b-d8_769x769_80k_cityscapes_20201226_190843-9142ee57.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_769x769_80k_cityscapes/deeplabv3_r101b-d8_769x769_80k_cityscapes-20201226_190843.log.json) |

### ADE20K

-| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

-| --------- | -------- | --------- | ------: | -------- | -------------- | ----: | ------------: | ----------------------------------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

-| DeepLabV3 | R-50-D8 | 512x512 | 80000 | 8.9 | 14.76 | 42.42 | 43.28 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb4-80k_ade20k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_80k_ade20k/deeplabv3_r50-d8_512x512_80k_ade20k_20200614_185028-0bb3f844.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_80k_ade20k/deeplabv3_r50-d8_512x512_80k_ade20k_20200614_185028.log.json) |

-| DeepLabV3 | R-101-D8 | 512x512 | 80000 | 12.4 | 10.14 | 44.08 | 45.19 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-80k_ade20k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_80k_ade20k/deeplabv3_r101-d8_512x512_80k_ade20k_20200615_021256-d89c7fa4.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_80k_ade20k/deeplabv3_r101-d8_512x512_80k_ade20k_20200615_021256.log.json) |

-| DeepLabV3 | R-50-D8 | 512x512 | 160000 | - | - | 42.66 | 44.09 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb4-160k_ade20k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_160k_ade20k/deeplabv3_r50-d8_512x512_160k_ade20k_20200615_123227-5d0ee427.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_160k_ade20k/deeplabv3_r50-d8_512x512_160k_ade20k_20200615_123227.log.json) |

-| DeepLabV3 | R-101-D8 | 512x512 | 160000 | - | - | 45.00 | 46.66 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-160k_ade20k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_160k_ade20k/deeplabv3_r101-d8_512x512_160k_ade20k_20200615_105816-b1f72b3b.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_160k_ade20k/deeplabv3_r101-d8_512x512_160k_ade20k_20200615_105816.log.json) |

+| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |

+| --------- | -------- | --------- | ------: | -------- | -------------- | ------ | ----: | ------------: | -------------------------------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| DeepLabV3 | R-50-D8 | 512x512 | 80000 | 8.9 | 14.76 | V100 | 42.42 | 43.28 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb4-80k_ade20k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_80k_ade20k/deeplabv3_r50-d8_512x512_80k_ade20k_20200614_185028-0bb3f844.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_80k_ade20k/deeplabv3_r50-d8_512x512_80k_ade20k_20200614_185028.log.json) |

+| DeepLabV3 | R-101-D8 | 512x512 | 80000 | 12.4 | 10.14 | V100 | 44.08 | 45.19 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-80k_ade20k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_80k_ade20k/deeplabv3_r101-d8_512x512_80k_ade20k_20200615_021256-d89c7fa4.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_80k_ade20k/deeplabv3_r101-d8_512x512_80k_ade20k_20200615_021256.log.json) |

+| DeepLabV3 | R-50-D8 | 512x512 | 160000 | - | - | V100 | 42.66 | 44.09 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb4-160k_ade20k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_160k_ade20k/deeplabv3_r50-d8_512x512_160k_ade20k_20200615_123227-5d0ee427.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_160k_ade20k/deeplabv3_r50-d8_512x512_160k_ade20k_20200615_123227.log.json) |

+| DeepLabV3 | R-101-D8 | 512x512 | 160000 | - | - | V100 | 45.00 | 46.66 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-160k_ade20k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_160k_ade20k/deeplabv3_r101-d8_512x512_160k_ade20k_20200615_105816-b1f72b3b.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_160k_ade20k/deeplabv3_r101-d8_512x512_160k_ade20k_20200615_105816.log.json) |

### Pascal VOC 2012 + Aug

-| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

-| --------- | -------- | --------- | ------: | -------- | -------------- | ----: | ------------: | ------------------------------------------------------------------------------------------------------------------------------------ | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

-| DeepLabV3 | R-50-D8 | 512x512 | 20000 | 6.1 | 13.88 | 76.17 | 77.42 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb4-20k_voc12aug-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_20k_voc12aug/deeplabv3_r50-d8_512x512_20k_voc12aug_20200617_010906-596905ef.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_20k_voc12aug/deeplabv3_r50-d8_512x512_20k_voc12aug_20200617_010906.log.json) |

-| DeepLabV3 | R-101-D8 | 512x512 | 20000 | 9.6 | 9.81 | 78.70 | 79.95 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-20k_voc12aug-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_20k_voc12aug/deeplabv3_r101-d8_512x512_20k_voc12aug_20200617_010932-8d13832f.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_20k_voc12aug/deeplabv3_r101-d8_512x512_20k_voc12aug_20200617_010932.log.json) |

-| DeepLabV3 | R-50-D8 | 512x512 | 40000 | - | - | 77.68 | 78.78 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb4-40k_voc12aug-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_40k_voc12aug/deeplabv3_r50-d8_512x512_40k_voc12aug_20200613_161546-2ae96e7e.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_40k_voc12aug/deeplabv3_r50-d8_512x512_40k_voc12aug_20200613_161546.log.json) |

-| DeepLabV3 | R-101-D8 | 512x512 | 40000 | - | - | 77.92 | 79.18 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-40k_voc12aug-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_40k_voc12aug/deeplabv3_r101-d8_512x512_40k_voc12aug_20200613_161432-0017d784.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_40k_voc12aug/deeplabv3_r101-d8_512x512_40k_voc12aug_20200613_161432.log.json) |

+| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |

+| --------- | -------- | --------- | ------: | -------- | -------------- | ------ | ----: | ------------: | --------------------------------------------------------------------------------------------------------------------------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| DeepLabV3 | R-50-D8 | 512x512 | 20000 | 6.1 | 13.88 | V100 | 76.17 | 77.42 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb4-20k_voc12aug-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_20k_voc12aug/deeplabv3_r50-d8_512x512_20k_voc12aug_20200617_010906-596905ef.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_20k_voc12aug/deeplabv3_r50-d8_512x512_20k_voc12aug_20200617_010906.log.json) |

+| DeepLabV3 | R-101-D8 | 512x512 | 20000 | 9.6 | 9.81 | V100 | 78.70 | 79.95 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-20k_voc12aug-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_20k_voc12aug/deeplabv3_r101-d8_512x512_20k_voc12aug_20200617_010932-8d13832f.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_20k_voc12aug/deeplabv3_r101-d8_512x512_20k_voc12aug_20200617_010932.log.json) |

+| DeepLabV3 | R-50-D8 | 512x512 | 40000 | - | - | V100 | 77.68 | 78.78 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb4-40k_voc12aug-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_40k_voc12aug/deeplabv3_r50-d8_512x512_40k_voc12aug_20200613_161546-2ae96e7e.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_40k_voc12aug/deeplabv3_r50-d8_512x512_40k_voc12aug_20200613_161546.log.json) |

+| DeepLabV3 | R-101-D8 | 512x512 | 40000 | - | - | V100 | 77.92 | 79.18 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-40k_voc12aug-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_40k_voc12aug/deeplabv3_r101-d8_512x512_40k_voc12aug_20200613_161432-0017d784.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_40k_voc12aug/deeplabv3_r101-d8_512x512_40k_voc12aug_20200613_161432.log.json) |

### Pascal Context

-| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

-| --------- | -------- | --------- | ------: | -------- | -------------- | ----: | ------------: | ------------------------------------------------------------------------------------------------------------------------------------------ | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

-| DeepLabV3 | R-101-D8 | 480x480 | 40000 | 9.2 | 7.09 | 46.55 | 47.81 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-40k_pascal-context-480x480.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_40k_pascal_context/deeplabv3_r101-d8_480x480_40k_pascal_context_20200911_204118-1aa27336.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_40k_pascal_context/deeplabv3_r101-d8_480x480_40k_pascal_context-20200911_204118.log.json) |

-| DeepLabV3 | R-101-D8 | 480x480 | 80000 | - | - | 46.42 | 47.53 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-80k_pascal-context-480x480.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_80k_pascal_context/deeplabv3_r101-d8_480x480_80k_pascal_context_20200911_170155-2a21fff3.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_80k_pascal_context/deeplabv3_r101-d8_480x480_80k_pascal_context-20200911_170155.log.json) |

+| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |

+| --------- | -------- | --------- | ------: | -------- | -------------- | ------ | ----: | ------------: | --------------------------------------------------------------------------------------------------------------------------------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| DeepLabV3 | R-101-D8 | 480x480 | 40000 | 9.2 | 7.09 | V100 | 46.55 | 47.81 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-40k_pascal-context-480x480.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_40k_pascal_context/deeplabv3_r101-d8_480x480_40k_pascal_context_20200911_204118-1aa27336.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_40k_pascal_context/deeplabv3_r101-d8_480x480_40k_pascal_context-20200911_204118.log.json) |

+| DeepLabV3 | R-101-D8 | 480x480 | 80000 | - | - | V100 | 46.42 | 47.53 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-80k_pascal-context-480x480.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_80k_pascal_context/deeplabv3_r101-d8_480x480_80k_pascal_context_20200911_170155-2a21fff3.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_80k_pascal_context/deeplabv3_r101-d8_480x480_80k_pascal_context-20200911_170155.log.json) |

### Pascal Context 59

-| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

-| --------- | -------- | --------- | ------: | -------- | -------------- | ----: | ------------: | --------------------------------------------------------------------------------------------------------------------------------------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

-| DeepLabV3 | R-101-D8 | 480x480 | 40000 | - | - | 52.61 | 54.28 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-40k_pascal-context-59-480x480.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_40k_pascal_context_59/deeplabv3_r101-d8_480x480_40k_pascal_context_59_20210416_110332-cb08ea46.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_40k_pascal_context_59/deeplabv3_r101-d8_480x480_40k_pascal_context_59-20210416_110332.log.json) |

-| DeepLabV3 | R-101-D8 | 480x480 | 80000 | - | - | 52.46 | 54.09 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-80k_pascal-context-59-480x480.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_80k_pascal_context_59/deeplabv3_r101-d8_480x480_80k_pascal_context_59_20210416_113002-26303993.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_80k_pascal_context_59/deeplabv3_r101-d8_480x480_80k_pascal_context_59-20210416_113002.log.json) |

+| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |

+| --------- | -------- | --------- | ------: | -------- | -------------- | ------ | ----: | ------------: | ------------------------------------------------------------------------------------------------------------------------------------------ | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| DeepLabV3 | R-101-D8 | 480x480 | 40000 | - | - | V100 | 52.61 | 54.28 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-40k_pascal-context-59-480x480.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_40k_pascal_context_59/deeplabv3_r101-d8_480x480_40k_pascal_context_59_20210416_110332-cb08ea46.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_40k_pascal_context_59/deeplabv3_r101-d8_480x480_40k_pascal_context_59-20210416_110332.log.json) |

+| DeepLabV3 | R-101-D8 | 480x480 | 80000 | - | - | V100 | 52.46 | 54.09 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-80k_pascal-context-59-480x480.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_80k_pascal_context_59/deeplabv3_r101-d8_480x480_80k_pascal_context_59_20210416_113002-26303993.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_480x480_80k_pascal_context_59/deeplabv3_r101-d8_480x480_80k_pascal_context_59-20210416_113002.log.json) |

### COCO-Stuff 10k

-| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

-| --------- | -------- | --------- | ------: | -------- | -------------- | ----: | ------------: | ----------------------------------------------------------------------------------------------------------------------------------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

-| DeepLabV3 | R-50-D8 | 512x512 | 20000 | 9.6 | 10.8 | 34.66 | 36.08 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb4-20k_coco-stuff10k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_20k_coco-stuff10k/deeplabv3_r50-d8_512x512_4x4_20k_coco-stuff10k_20210821_043025-b35f789d.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_20k_coco-stuff10k/deeplabv3_r50-d8_512x512_4x4_20k_coco-stuff10k_20210821_043025.log.json) |

-| DeepLabV3 | R-101-D8 | 512x512 | 20000 | 13.2 | 8.7 | 37.30 | 38.42 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-20k_coco-stuff10k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_20k_coco-stuff10k/deeplabv3_r101-d8_512x512_4x4_20k_coco-stuff10k_20210821_043025-c49752cb.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_20k_coco-stuff10k/deeplabv3_r101-d8_512x512_4x4_20k_coco-stuff10k_20210821_043025.log.json) |

-| DeepLabV3 | R-50-D8 | 512x512 | 40000 | - | - | 35.73 | 37.09 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb4-40k_coco-stuff10k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_40k_coco-stuff10k/deeplabv3_r50-d8_512x512_4x4_40k_coco-stuff10k_20210821_043305-dc76f3ff.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_40k_coco-stuff10k/deeplabv3_r50-d8_512x512_4x4_40k_coco-stuff10k_20210821_043305.log.json) |

-| DeepLabV3 | R-101-D8 | 512x512 | 40000 | - | - | 37.81 | 38.80 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-40k_coco-stuff10k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_40k_coco-stuff10k/deeplabv3_r101-d8_512x512_4x4_40k_coco-stuff10k_20210821_043305-636cb433.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_40k_coco-stuff10k/deeplabv3_r101-d8_512x512_4x4_40k_coco-stuff10k_20210821_043305.log.json) |

+| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |

+| --------- | -------- | --------- | ------: | -------- | -------------- | ------ | ----: | ------------: | -------------------------------------------------------------------------------------------------------------------------------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| DeepLabV3 | R-50-D8 | 512x512 | 20000 | 9.6 | 10.8 | V100 | 34.66 | 36.08 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb4-20k_coco-stuff10k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_20k_coco-stuff10k/deeplabv3_r50-d8_512x512_4x4_20k_coco-stuff10k_20210821_043025-b35f789d.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_20k_coco-stuff10k/deeplabv3_r50-d8_512x512_4x4_20k_coco-stuff10k_20210821_043025.log.json) |

+| DeepLabV3 | R-101-D8 | 512x512 | 20000 | 13.2 | 8.7 | V100 | 37.30 | 38.42 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-20k_coco-stuff10k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_20k_coco-stuff10k/deeplabv3_r101-d8_512x512_4x4_20k_coco-stuff10k_20210821_043025-c49752cb.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_20k_coco-stuff10k/deeplabv3_r101-d8_512x512_4x4_20k_coco-stuff10k_20210821_043025.log.json) |

+| DeepLabV3 | R-50-D8 | 512x512 | 40000 | - | - | V100 | 35.73 | 37.09 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb4-40k_coco-stuff10k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_40k_coco-stuff10k/deeplabv3_r50-d8_512x512_4x4_40k_coco-stuff10k_20210821_043305-dc76f3ff.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_40k_coco-stuff10k/deeplabv3_r50-d8_512x512_4x4_40k_coco-stuff10k_20210821_043305.log.json) |

+| DeepLabV3 | R-101-D8 | 512x512 | 40000 | - | - | V100 | 37.81 | 38.80 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-40k_coco-stuff10k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_40k_coco-stuff10k/deeplabv3_r101-d8_512x512_4x4_40k_coco-stuff10k_20210821_043305-636cb433.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_40k_coco-stuff10k/deeplabv3_r101-d8_512x512_4x4_40k_coco-stuff10k_20210821_043305.log.json) |

### COCO-Stuff 164k

-| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | mIoU | mIoU(ms+flip) | config | download |

-| --------- | -------- | --------- | ------: | -------- | -------------- | ----: | ------------: | ------------------------------------------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

-| DeepLabV3 | R-50-D8 | 512x512 | 80000 | 9.6 | 10.8 | 39.38 | 40.03 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb4-80k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_80k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_80k_coco-stuff164k_20210709_163016-88675c24.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_80k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_80k_coco-stuff164k_20210709_163016.log.json) |

-| DeepLabV3 | R-101-D8 | 512x512 | 80000 | 13.2 | 8.7 | 40.87 | 41.50 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-80k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_80k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_80k_coco-stuff164k_20210709_201252-13600dc2.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_80k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_80k_coco-stuff164k_20210709_201252.log.json) |

-| DeepLabV3 | R-50-D8 | 512x512 | 160000 | - | - | 41.09 | 41.69 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb4-160k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_160k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_160k_coco-stuff164k_20210709_163016-49f2812b.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_160k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_160k_coco-stuff164k_20210709_163016.log.json) |

-| DeepLabV3 | R-101-D8 | 512x512 | 160000 | - | - | 41.82 | 42.49 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-160k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_160k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_160k_coco-stuff164k_20210709_155402-f035acfd.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_160k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_160k_coco-stuff164k_20210709_155402.log.json) |

-| DeepLabV3 | R-50-D8 | 512x512 | 320000 | - | - | 41.37 | 42.22 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r50-d8_4xb4-320k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_320k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_320k_coco-stuff164k_20210709_155403-51b21115.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_320k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_320k_coco-stuff164k_20210709_155403.log.json) |

-| DeepLabV3 | R-101-D8 | 512x512 | 320000 | - | - | 42.61 | 43.42 | [config](https://github.com/open-mmlab/mmsegmentation/blob/dev-1.x/configs/deeplabv3/deeplabv3_r101-d8_4xb4-320k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_320k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_320k_coco-stuff164k_20210709_155402-3cbca14d.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_320k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_320k_coco-stuff164k_20210709_155402.log.json) |

+| Method | Backbone | Crop Size | Lr schd | Mem (GB) | Inf time (fps) | Device | mIoU | mIoU(ms+flip) | config | download |

+| --------- | -------- | --------- | ------: | -------- | -------------- | ------ | ----: | ------------: | ---------------------------------------------------------------------------------------------------------------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| DeepLabV3 | R-50-D8 | 512x512 | 80000 | 9.6 | 10.8 | V100 | 39.38 | 40.03 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb4-80k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_80k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_80k_coco-stuff164k_20210709_163016-88675c24.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_80k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_80k_coco-stuff164k_20210709_163016.log.json) |

+| DeepLabV3 | R-101-D8 | 512x512 | 80000 | 13.2 | 8.7 | V100 | 40.87 | 41.50 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-80k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_80k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_80k_coco-stuff164k_20210709_201252-13600dc2.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_80k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_80k_coco-stuff164k_20210709_201252.log.json) |

+| DeepLabV3 | R-50-D8 | 512x512 | 160000 | - | - | V100 | 41.09 | 41.69 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb4-160k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_160k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_160k_coco-stuff164k_20210709_163016-49f2812b.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_160k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_160k_coco-stuff164k_20210709_163016.log.json) |

+| DeepLabV3 | R-101-D8 | 512x512 | 160000 | - | - | V100 | 41.82 | 42.49 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-160k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_160k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_160k_coco-stuff164k_20210709_155402-f035acfd.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_160k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_160k_coco-stuff164k_20210709_155402.log.json) |

+| DeepLabV3 | R-50-D8 | 512x512 | 320000 | - | - | V100 | 41.37 | 42.22 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r50-d8_4xb4-320k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_320k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_320k_coco-stuff164k_20210709_155403-51b21115.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_4x4_320k_coco-stuff164k/deeplabv3_r50-d8_512x512_4x4_320k_coco-stuff164k_20210709_155403.log.json) |

+| DeepLabV3 | R-101-D8 | 512x512 | 320000 | - | - | V100 | 42.61 | 43.42 | [config](https://github.com/open-mmlab/mmsegmentation/blob/main/configs/deeplabv3/deeplabv3_r101-d8_4xb4-320k_coco-stuff164k-512x512.py) | [model](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_320k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_320k_coco-stuff164k_20210709_155402-3cbca14d.pth) \| [log](https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_4x4_320k_coco-stuff164k/deeplabv3_r101-d8_512x512_4x4_320k_coco-stuff164k_20210709_155402.log.json) |

Note:

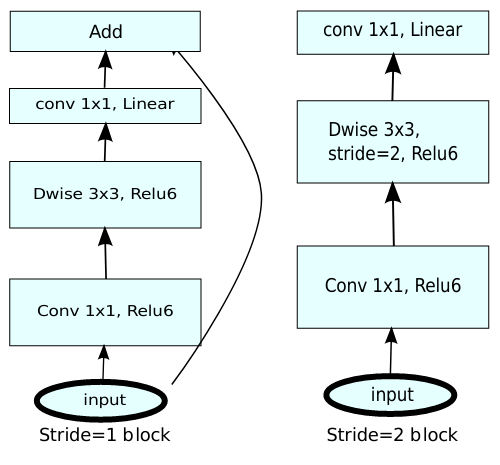

- `D-8` here corresponding to the output stride 8 setting for DeepLab series.

- `FP16` means Mixed Precision (FP16) is adopted in training.

+

+## Citation

+

+```bibtext

+@article{chen2017rethinking,

+ title={Rethinking atrous convolution for semantic image segmentation},

+ author={Chen, Liang-Chieh and Papandreou, George and Schroff, Florian and Adam, Hartwig},

+ journal={arXiv preprint arXiv:1706.05587},

+ year={2017}

+}

+```

diff --git a/configs/deeplabv3/deeplabv3.yml b/configs/deeplabv3/deeplabv3.yml

deleted file mode 100644

index 6196212992..0000000000

--- a/configs/deeplabv3/deeplabv3.yml

+++ /dev/null

@@ -1,756 +0,0 @@

-Collections:

-- Name: DeepLabV3

- Metadata:

- Training Data:

- - Cityscapes

- - ADE20K

- - Pascal VOC 2012 + Aug

- - Pascal Context

- - Pascal Context 59

- - COCO-Stuff 10k

- - COCO-Stuff 164k

- Paper:

- URL: https://arxiv.org/abs/1706.05587

- Title: Rethinking atrous convolution for semantic image segmentation

- README: configs/deeplabv3/README.md

- Code:

- URL: https://github.com/open-mmlab/mmsegmentation/blob/v0.17.0/mmseg/models/decode_heads/aspp_head.py#L54

- Version: v0.17.0

- Converted From:

- Code: https://github.com/tensorflow/models/tree/master/research/deeplab

-Models:

-- Name: deeplabv3_r50-d8_4xb2-40k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50-D8

- crop size: (512,1024)

- lr schd: 40000

- inference time (ms/im):

- - value: 389.11

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,1024)

- Training Memory (GB): 6.1

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 79.09

- mIoU(ms+flip): 80.45

- Config: configs/deeplabv3/deeplabv3_r50-d8_4xb2-40k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_40k_cityscapes/deeplabv3_r50-d8_512x1024_40k_cityscapes_20200605_022449-acadc2f8.pth

-- Name: deeplabv3_r101-d8_4xb2-40k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D8

- crop size: (512,1024)

- lr schd: 40000

- inference time (ms/im):

- - value: 520.83

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,1024)

- Training Memory (GB): 9.6

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 77.12

- mIoU(ms+flip): 79.61

- Config: configs/deeplabv3/deeplabv3_r101-d8_4xb2-40k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_40k_cityscapes/deeplabv3_r101-d8_512x1024_40k_cityscapes_20200605_012241-7fd3f799.pth

-- Name: deeplabv3_r50-d8_4xb2-40k_cityscapes-769x769

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50-D8

- crop size: (769,769)

- lr schd: 40000

- inference time (ms/im):

- - value: 900.9

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (769,769)

- Training Memory (GB): 6.9

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 78.58

- mIoU(ms+flip): 79.89

- Config: configs/deeplabv3/deeplabv3_r50-d8_4xb2-40k_cityscapes-769x769.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_40k_cityscapes/deeplabv3_r50-d8_769x769_40k_cityscapes_20200606_113723-7eda553c.pth

-- Name: deeplabv3_r101-d8_4xb2-40k_cityscapes-769x769

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D8

- crop size: (769,769)

- lr schd: 40000

- inference time (ms/im):

- - value: 1204.82

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (769,769)

- Training Memory (GB): 10.9

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 79.27

- mIoU(ms+flip): 80.11

- Config: configs/deeplabv3/deeplabv3_r101-d8_4xb2-40k_cityscapes-769x769.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_40k_cityscapes/deeplabv3_r101-d8_769x769_40k_cityscapes_20200606_113809-c64f889f.pth

-- Name: deeplabv3_r18-d8_4xb2-80k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-18-D8

- crop size: (512,1024)

- lr schd: 80000

- inference time (ms/im):

- - value: 72.57

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,1024)

- Training Memory (GB): 1.7

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 76.7

- mIoU(ms+flip): 78.27

- Config: configs/deeplabv3/deeplabv3_r18-d8_4xb2-80k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_512x1024_80k_cityscapes/deeplabv3_r18-d8_512x1024_80k_cityscapes_20201225_021506-23dffbe2.pth

-- Name: deeplabv3_r50-d8_4xb2-80k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50-D8

- crop size: (512,1024)

- lr schd: 80000

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 79.32

- mIoU(ms+flip): 80.57

- Config: configs/deeplabv3/deeplabv3_r50-d8_4xb2-80k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x1024_80k_cityscapes/deeplabv3_r50-d8_512x1024_80k_cityscapes_20200606_113404-b92cfdd4.pth

-- Name: deeplabv3_r101-d8_4xb2-80k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D8

- crop size: (512,1024)

- lr schd: 80000

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 80.2

- mIoU(ms+flip): 81.21

- Config: configs/deeplabv3/deeplabv3_r101-d8_4xb2-80k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x1024_80k_cityscapes/deeplabv3_r101-d8_512x1024_80k_cityscapes_20200606_113503-9e428899.pth

-- Name: deeplabv3_r101-d8_4xb2-amp-80k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D8

- crop size: (512,1024)

- lr schd: 80000

- inference time (ms/im):

- - value: 259.07

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: AMP

- resolution: (512,1024)

- Training Memory (GB): 5.75

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 80.48

- Config: configs/deeplabv3/deeplabv3_r101-d8_4xb2-amp-80k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes/deeplabv3_r101-d8_fp16_512x1024_80k_cityscapes_20200717_230920-774d9cec.pth

-- Name: deeplabv3_r18-d8_4xb2-80k_cityscapes-769x769

- In Collection: DeepLabV3

- Metadata:

- backbone: R-18-D8

- crop size: (769,769)

- lr schd: 80000

- inference time (ms/im):

- - value: 180.18

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (769,769)

- Training Memory (GB): 1.9

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 76.6

- mIoU(ms+flip): 78.26

- Config: configs/deeplabv3/deeplabv3_r18-d8_4xb2-80k_cityscapes-769x769.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18-d8_769x769_80k_cityscapes/deeplabv3_r18-d8_769x769_80k_cityscapes_20201225_021506-6452126a.pth

-- Name: deeplabv3_r50-d8_4xb2-80k_cityscapes-769x769

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50-D8

- crop size: (769,769)

- lr schd: 80000

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 79.89

- mIoU(ms+flip): 81.06

- Config: configs/deeplabv3/deeplabv3_r50-d8_4xb2-80k_cityscapes-769x769.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_769x769_80k_cityscapes/deeplabv3_r50-d8_769x769_80k_cityscapes_20200606_221338-788d6228.pth

-- Name: deeplabv3_r101-d8_4xb2-80k_cityscapes-769x769

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D8

- crop size: (769,769)

- lr schd: 80000

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 79.67

- mIoU(ms+flip): 80.81

- Config: configs/deeplabv3/deeplabv3_r101-d8_4xb2-80k_cityscapes-769x769.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_769x769_80k_cityscapes/deeplabv3_r101-d8_769x769_80k_cityscapes_20200607_013353-60e95418.pth

-- Name: deeplabv3_r101-d16-mg124_4xb2-80k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D16-MG124

- crop size: (512,1024)

- lr schd: 80000

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 78.36

- mIoU(ms+flip): 79.84

- Config: configs/deeplabv3/deeplabv3_r101-d16-mg124_4xb2-80k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes/deeplabv3_r101-d16-mg124_512x1024_80k_cityscapes_20200908_005644-57bb8425.pth

-- Name: deeplabv3_r18b-d8_4xb2-80k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-18b-D8

- crop size: (512,1024)

- lr schd: 80000

- inference time (ms/im):

- - value: 71.79

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,1024)

- Training Memory (GB): 1.6

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 76.26

- mIoU(ms+flip): 77.88

- Config: configs/deeplabv3/deeplabv3_r18b-d8_4xb2-80k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_512x1024_80k_cityscapes/deeplabv3_r18b-d8_512x1024_80k_cityscapes_20201225_094144-46040cef.pth

-- Name: deeplabv3_r50b-d8_4xb2-80k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50b-D8

- crop size: (512,1024)

- lr schd: 80000

- inference time (ms/im):

- - value: 364.96

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,1024)

- Training Memory (GB): 6.0

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 79.63

- mIoU(ms+flip): 80.98

- Config: configs/deeplabv3/deeplabv3_r50b-d8_4xb2-80k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_512x1024_80k_cityscapes/deeplabv3_r50b-d8_512x1024_80k_cityscapes_20201225_155148-ec368954.pth

-- Name: deeplabv3_r101b-d8_4xb2-80k_cityscapes-512x1024

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101b-D8

- crop size: (512,1024)

- lr schd: 80000

- inference time (ms/im):

- - value: 552.49

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,1024)

- Training Memory (GB): 9.5

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 80.01

- mIoU(ms+flip): 81.21

- Config: configs/deeplabv3/deeplabv3_r101b-d8_4xb2-80k_cityscapes-512x1024.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_512x1024_80k_cityscapes/deeplabv3_r101b-d8_512x1024_80k_cityscapes_20201226_171821-8fd49503.pth

-- Name: deeplabv3_r18b-d8_4xb2-80k_cityscapes-769x769

- In Collection: DeepLabV3

- Metadata:

- backbone: R-18b-D8

- crop size: (769,769)

- lr schd: 80000

- inference time (ms/im):

- - value: 172.71

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (769,769)

- Training Memory (GB): 1.8

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 75.63

- mIoU(ms+flip): 77.51

- Config: configs/deeplabv3/deeplabv3_r18b-d8_4xb2-80k_cityscapes-769x769.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r18b-d8_769x769_80k_cityscapes/deeplabv3_r18b-d8_769x769_80k_cityscapes_20201225_094144-fdc985d9.pth

-- Name: deeplabv3_r50b-d8_4xb2-80k_cityscapes-769x769

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50b-D8

- crop size: (769,769)

- lr schd: 80000

- inference time (ms/im):

- - value: 862.07

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (769,769)

- Training Memory (GB): 6.8

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 78.8

- mIoU(ms+flip): 80.27

- Config: configs/deeplabv3/deeplabv3_r50b-d8_4xb2-80k_cityscapes-769x769.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50b-d8_769x769_80k_cityscapes/deeplabv3_r50b-d8_769x769_80k_cityscapes_20201225_155404-87fb0cf4.pth

-- Name: deeplabv3_r101b-d8_4xb2-80k_cityscapes-769x769

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101b-D8

- crop size: (769,769)

- lr schd: 80000

- inference time (ms/im):

- - value: 1219.51

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (769,769)

- Training Memory (GB): 10.7

- Results:

- - Task: Semantic Segmentation

- Dataset: Cityscapes

- Metrics:

- mIoU: 79.41

- mIoU(ms+flip): 80.73

- Config: configs/deeplabv3/deeplabv3_r101b-d8_4xb2-80k_cityscapes-769x769.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101b-d8_769x769_80k_cityscapes/deeplabv3_r101b-d8_769x769_80k_cityscapes_20201226_190843-9142ee57.pth

-- Name: deeplabv3_r50-d8_4xb4-80k_ade20k-512x512

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50-D8

- crop size: (512,512)

- lr schd: 80000

- inference time (ms/im):

- - value: 67.75

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,512)

- Training Memory (GB): 8.9

- Results:

- - Task: Semantic Segmentation

- Dataset: ADE20K

- Metrics:

- mIoU: 42.42

- mIoU(ms+flip): 43.28

- Config: configs/deeplabv3/deeplabv3_r50-d8_4xb4-80k_ade20k-512x512.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_80k_ade20k/deeplabv3_r50-d8_512x512_80k_ade20k_20200614_185028-0bb3f844.pth

-- Name: deeplabv3_r101-d8_4xb4-80k_ade20k-512x512

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D8

- crop size: (512,512)

- lr schd: 80000

- inference time (ms/im):

- - value: 98.62

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,512)

- Training Memory (GB): 12.4

- Results:

- - Task: Semantic Segmentation

- Dataset: ADE20K

- Metrics:

- mIoU: 44.08

- mIoU(ms+flip): 45.19

- Config: configs/deeplabv3/deeplabv3_r101-d8_4xb4-80k_ade20k-512x512.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_80k_ade20k/deeplabv3_r101-d8_512x512_80k_ade20k_20200615_021256-d89c7fa4.pth

-- Name: deeplabv3_r50-d8_4xb4-160k_ade20k-512x512

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50-D8

- crop size: (512,512)

- lr schd: 160000

- Results:

- - Task: Semantic Segmentation

- Dataset: ADE20K

- Metrics:

- mIoU: 42.66

- mIoU(ms+flip): 44.09

- Config: configs/deeplabv3/deeplabv3_r50-d8_4xb4-160k_ade20k-512x512.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_160k_ade20k/deeplabv3_r50-d8_512x512_160k_ade20k_20200615_123227-5d0ee427.pth

-- Name: deeplabv3_r101-d8_4xb4-160k_ade20k-512x512

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D8

- crop size: (512,512)

- lr schd: 160000

- Results:

- - Task: Semantic Segmentation

- Dataset: ADE20K

- Metrics:

- mIoU: 45.0

- mIoU(ms+flip): 46.66

- Config: configs/deeplabv3/deeplabv3_r101-d8_4xb4-160k_ade20k-512x512.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_160k_ade20k/deeplabv3_r101-d8_512x512_160k_ade20k_20200615_105816-b1f72b3b.pth

-- Name: deeplabv3_r50-d8_4xb4-20k_voc12aug-512x512

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50-D8

- crop size: (512,512)

- lr schd: 20000

- inference time (ms/im):

- - value: 72.05

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,512)

- Training Memory (GB): 6.1

- Results:

- - Task: Semantic Segmentation

- Dataset: Pascal VOC 2012 + Aug

- Metrics:

- mIoU: 76.17

- mIoU(ms+flip): 77.42

- Config: configs/deeplabv3/deeplabv3_r50-d8_4xb4-20k_voc12aug-512x512.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_20k_voc12aug/deeplabv3_r50-d8_512x512_20k_voc12aug_20200617_010906-596905ef.pth

-- Name: deeplabv3_r101-d8_4xb4-20k_voc12aug-512x512

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D8

- crop size: (512,512)

- lr schd: 20000

- inference time (ms/im):

- - value: 101.94

- hardware: V100

- backend: PyTorch

- batch size: 1

- mode: FP32

- resolution: (512,512)

- Training Memory (GB): 9.6

- Results:

- - Task: Semantic Segmentation

- Dataset: Pascal VOC 2012 + Aug

- Metrics:

- mIoU: 78.7

- mIoU(ms+flip): 79.95

- Config: configs/deeplabv3/deeplabv3_r101-d8_4xb4-20k_voc12aug-512x512.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r101-d8_512x512_20k_voc12aug/deeplabv3_r101-d8_512x512_20k_voc12aug_20200617_010932-8d13832f.pth

-- Name: deeplabv3_r50-d8_4xb4-40k_voc12aug-512x512

- In Collection: DeepLabV3

- Metadata:

- backbone: R-50-D8

- crop size: (512,512)

- lr schd: 40000

- Results:

- - Task: Semantic Segmentation

- Dataset: Pascal VOC 2012 + Aug

- Metrics:

- mIoU: 77.68

- mIoU(ms+flip): 78.78

- Config: configs/deeplabv3/deeplabv3_r50-d8_4xb4-40k_voc12aug-512x512.py

- Weights: https://download.openmmlab.com/mmsegmentation/v0.5/deeplabv3/deeplabv3_r50-d8_512x512_40k_voc12aug/deeplabv3_r50-d8_512x512_40k_voc12aug_20200613_161546-2ae96e7e.pth

-- Name: deeplabv3_r101-d8_4xb4-40k_voc12aug-512x512

- In Collection: DeepLabV3

- Metadata:

- backbone: R-101-D8

- crop size: (512,512)

- lr schd: 40000

- Results:

- - Task: Semantic Segmentation

- Dataset: Pascal VOC 2012 + Aug

- Metrics: