Deformable DETR: Deformable Transformers for End-to-End Object Detection

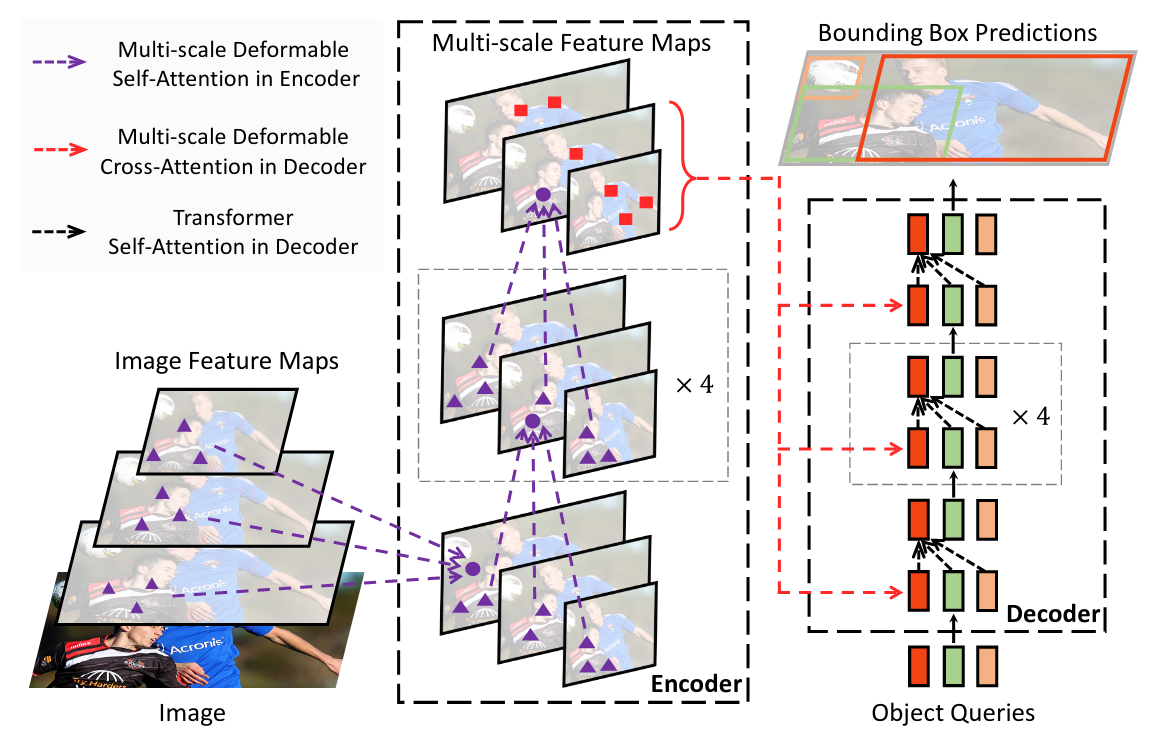

DETR was recently proposed to eliminate the need for many hand-designed components in object detection while demonstrating good performance. However, it suffers from slow convergence and limited feature spatial resolution, because Transformer attention modules are limited in how they process image feature maps. To mitigate these issues, we propose Deformable DETR, whose attention modules attend only to a small set of key sampling points around a reference point. Deformable DETR achieves better performance than DETR (especially on small objects) with 10 times fewer training epochs. Extensive experiments on the COCO benchmark demonstrate the effectiveness of our approach.
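The core idea in the abstract, attending only to a few sampled points around a reference, can be illustrated with a minimal pure-Python sketch. It is a simplification under stated assumptions, not the implementation shipped with these configs: it uses one head, one feature scale, and scalar features, and it takes the sampling offsets and attention logits as plain inputs, whereas the paper predicts both from the query feature. The function names `bilinear_sample`, `softmax`, and `deformable_attention` are hypothetical.

```python
import math


def bilinear_sample(feature_map, x, y):
    """Bilinearly interpolate a 2D scalar feature map at fractional (x, y).

    Neighbours that fall outside the map contribute zero (zero padding).
    """
    height, width = len(feature_map), len(feature_map[0])
    x0, y0 = math.floor(x), math.floor(y)
    value = 0.0
    for yi in (y0, y0 + 1):
        for xi in (x0, x0 + 1):
            if 0 <= xi < width and 0 <= yi < height:
                weight = (1 - abs(x - xi)) * (1 - abs(y - yi))
                value += weight * feature_map[yi][xi]
    return value


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def deformable_attention(feature_map, reference, offsets, logits):
    """Weighted sum of K sampled values around one reference point.

    Assumption: `offsets` and `logits` are given directly; in the paper
    they come from linear projections of the query feature.
    """
    weights = softmax(logits)
    ref_x, ref_y = reference
    output = 0.0
    for (dx, dy), weight in zip(offsets, weights):
        output += weight * bilinear_sample(feature_map, ref_x + dx, ref_y + dy)
    return output


# Toy 4x4 feature map whose value at (x, y) is 4 * y + x.
feature_map = [[4 * y + x for x in range(4)] for y in range(4)]
result = deformable_attention(
    feature_map,
    reference=(1.5, 1.5),
    offsets=[(-0.5, -0.5), (0.5, 0.0), (0.0, 0.5), (1.0, 1.0)],
    logits=[0.0, 0.0, 0.0, 0.0],
)
print(result)
```

The cost per query depends on the number of sampling points (four here) rather than on the size of the feature map, which is the property the abstract relies on to work with higher-resolution features.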

| Backbone | Model | Lr schd | box AP | Config | Download |
|---|---|---|---|---|---|
| R-50 | Deformable DETR | 50e | 44.5 | config | model / log |
| R-50 | + iterative bounding box refinement | 50e | 46.1 | config | model / log |
| R-50 | ++ two-stage Deformable DETR | 50e | 46.8 | config | model / log |

- All models are trained with batch size 32.

- The performance is unstable. Deformable DETR and iterative bounding box refinement may fluctuate by about 0.3 mAP; two-stage Deformable DETR may fluctuate by about 0.2 mAP.
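When reproducing these numbers, it can help to check a measured box AP against the reported value and the quoted fluctuation. The sketch below assumes the fluctuation is a symmetric band around the reported AP, which the notes do not state explicitly; the dictionary names and the helper `within_expected_fluctuation` are hypothetical.

```python
# Reported box AP from the results table, keyed by variant name.
REPORTED_AP = {
    "Deformable DETR": 44.5,
    "iterative bounding box refinement": 46.1,
    "two-stage Deformable DETR": 46.8,
}

# Fluctuation quoted in the notes, in mAP.
# Assumption: treated as a symmetric +/- band around the reported AP.
FLUCTUATION = {
    "Deformable DETR": 0.3,
    "iterative bounding box refinement": 0.3,
    "two-stage Deformable DETR": 0.2,
}


def within_expected_fluctuation(variant, measured_ap):
    """Return True if measured_ap lies inside the quoted band for variant."""
    gap = abs(measured_ap - REPORTED_AP[variant])
    # Small epsilon guards against floating-point rounding at the band edge.
    return gap <= FLUCTUATION[variant] + 1e-9


print(within_expected_fluctuation("Deformable DETR", 44.3))
print(within_expected_fluctuation("two-stage Deformable DETR", 46.5))
```

A result outside the band is not necessarily a bug, but it is a reasonable prompt to recheck settings such as the batch size of 32 noted above.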

We provide the config files for Deformable DETR, introduced in the paper "Deformable DETR: Deformable Transformers for End-to-End Object Detection".

@inproceedings{zhu2021deformable,
  title={Deformable DETR: Deformable Transformers for End-to-End Object Detection},
  author={Xizhou Zhu and Weijie Su and Lewei Lu and Bin Li and Xiaogang Wang and Jifeng Dai},
  booktitle={International Conference on Learning Representations},
  year={2021},
  url={https://openreview.net/forum?id=gZ9hCDWe6ke}
}